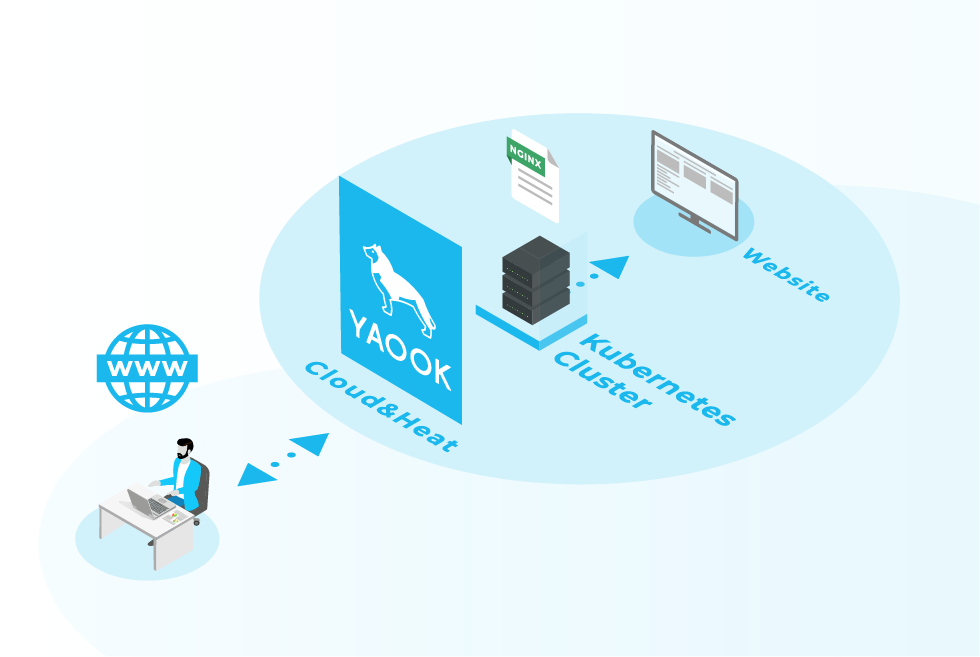

Hi! We are Vama and Lavon, working students at Cloud&Heat Technologies. In this tutorial, we would like to show you how to offer an NGINX webserver using Yaook Kubernetes (yk8s) by Cloud&Heat and Schwarz IT.

You may wonder why it takes so much work to spin up a simple k8s cluster when there are much shorter tutorials out there.

yk8s is not meant for simply running an NGINX but for more complex setups, and for those, life cycle management is a very important task: How do I upgrade Kubernetes and the systems hosting it? How do I increase the worker count or migrate some gateways to a different availability zone (AZ)? yk8s is meant to solve these problems, because when we started the project we couldn't find tooling that fulfilled all our requirements.

These days, for example, we operate our OpenStack on top of it. The code for that is also under the umbrella of the Yaook project, so feel free to check it out afterwards! We are also planning to move internal services like GitLab or Nextcloud into yk8s.

yk8s Handbook: https://yaook.gitlab.io/k8s

Legend

- Lines prefixed with $ are commands to be executed
- Lines prefixed with # are comments
- Values surrounded by <> need to be computed by your brain and replaced accordingly

What do we need?

- Access to an OpenStack cloud with the following resources available:
  - Ubuntu 18.04 or 20.04 and Debian 10 or 11 images
    - The login user of the Ubuntu instance needs to be ubuntu
    - The login user of the Debian instance needs to be debian
  - At least 3 VMs need to be able to spawn
  - Flavors:
    - 1x XS (RAM: 1 GB, vCPUs: 1, disk: 10 GB)
    - 2x M (RAM: 4 GB, vCPUs: 2, disk: 25 GB)
  - Network:
    - 1 project network needs to be able to be created
    - 1 project router needs to be able to be created
    - 1 provider network for access to the internet needs to be available
    - At least 2 floating IPs allocatable; 10 ports in total will be created
  - An SSH key configured to access spawned instances, and the name of that key known to you
- A Unix shell environment for running the tutorial (called workstation). Note: The tutorial is based on Ubuntu 20.04
- The link to the FAQ in case you run into trouble: https://yaook.gitlab.io/k8s/faq.html
- A way to reach us in case the FAQ can't help: we are in #yaook on OFTC via IRC: https://webchat.oftc.net/?channels=yaook

Prepare the workstation

We begin with the packages that need to be installed (remember, the tutorial is based on Ubuntu 20.04):

$ sudo apt install python3-pip python3-venv python3-toml moreutils jq wireguard pass direnv

Install Terraform

Terraform allows infrastructure to be expressed as code in a simple, human-readable language called HCL (HashiCorp Configuration Language). It reads configuration files and provides an execution plan of changes, which can be reviewed for safety and then applied and provisioned. To install Terraform, we run these commands, taken from https://www.terraform.io/downloads:

$ curl -fsSL https://apt.releases.hashicorp.com/gpg | sudo apt-key add -
$ sudo apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"
$ sudo apt-get update && sudo apt-get install terraform

Install Helm

Helm is the package manager for Kubernetes. It works with Helm charts, which are packages of Kubernetes resources used to deploy apps to a cluster. To install it, follow the instructions on https://helm.sh/docs/intro/install/; on Ubuntu we used the following apt-based commands from there:

$ curl https://baltocdn.com/helm/signing.asc | sudo apt-key add -
$ sudo apt-get install apt-transport-https --yes
$ echo "deb https://baltocdn.com/helm/stable/debian/ all main" | sudo tee /etc/apt/sources.list.d/helm-stable-debian.list
$ sudo apt-get update
$ sudo apt-get install helm
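With the packages, Terraform and Helm installed, you may want to quickly check that the commands actually ended up on your PATH. The following is a minimal POSIX shell sketch; the function name missing_tools is our own, and the mapping from packages to command names (wg for wireguard, sponge for moreutils, pip3 for python3-pip) is an assumption.

```shell
# Print every command from the argument list that is not on PATH and
# return non-zero if at least one of them is missing.
missing_tools() {
    local tool status=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool"
            status=1
        fi
    done
    return "$status"
}

# The command names are assumptions: `wg` comes from the wireguard package,
# `sponge` from moreutils. python3-venv and python3-toml ship Python modules,
# not commands, so this check does not cover them.
# missing_tools python3 pip3 jq wg pass direnv sponge terraform helm
```

No output and exit code 0 mean that all listed commands were found.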

Configure WireGuard

For increased security, the Kubernetes cluster is not directly accessible from the internet by default. Instead, you can only reach it via a VPN, for which WireGuard (WG) is used. In front of the actual Kubernetes cluster, at least one gateway host is configured, which exposes an SSH and a WireGuard endpoint to the public. These are your access points to the whole cluster until you explicitly expose services via Kubernetes mechanisms.

# Create the configuration directory for WG
$ mkdir ~/.wireguard/
# Create the WG private key (readable only by you)
$ (umask 0077 && wg genkey > ~/.wireguard/wg.key)
# Derive the public key and export it into a file
$ wg pubkey < ~/.wireguard/wg.key > ~/.wireguard/wg.pub
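If you want to double-check the generated keys, the following sketch tests that a string has the shape of a WireGuard key (32 bytes, base64-encoded) and that the private key file is only accessible by you. Both functions are hypothetical helpers, not part of yk8s, and stat -c assumes GNU coreutils as on Ubuntu.

```shell
# Succeed if $1 looks like a WireGuard key: 32 raw bytes, base64-encoded,
# which gives 43 base64 characters followed by a single "=".
is_wg_key() {
    printf '%s' "$1" | grep -Eq '^[A-Za-z0-9+/]{43}=$' || return 1
    # Decoding must give back exactly 32 bytes.
    [ "$(printf '%s' "$1" | base64 -d 2>/dev/null | wc -c)" -eq 32 ]
}

# Succeed if file $1 is readable and writable by its owner only (mode 600).
# `stat -c` is the GNU coreutils syntax available on Ubuntu.
is_private_file() {
    [ "$(stat -c '%a' "$1")" = "600" ]
}

# is_wg_key "$(cat ~/.wireguard/wg.key)" && is_wg_key "$(cat ~/.wireguard/wg.pub)" && echo "keys look fine"
# is_private_file ~/.wireguard/wg.key || echo "wg.key is readable by others"
```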

Configure GPG

Some credentials, for example the private key of the WG VPN, are stored in the password manager pass. Therefore you need to have a GPG key available.

If you already have a GPG key, you can get the long ID that you need in a later step by executing the following (with your own e-mail address):

$ gpg --keyid-format LONG -K test.user@mail.fake

If you don't have a GPG key yet, you can generate one non-interactively like this (replace the example name, e-mail address and passphrase with your own) and then run the command above to get its long ID:

$ gpg --batch --gen-key <<EOF
Key-Type: 1
Key-Length: 4096
Subkey-Type: 1
Subkey-Length: 4096
Name-Real: Test Name
Name-Email: test.user@mail.fake
Expire-Date: 0
Passphrase: password
EOF
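To avoid copying the ID by hand, you can let gpg print its machine-readable listing and extract the fingerprint of your primary key from it. This sketch asks gpg for the colon-separated format, in which fpr records carry the fingerprint in field 10; primary_fingerprint is a hypothetical helper name. For v4 keys, the 16-character long ID is the last 16 characters of that fingerprint.

```shell
# Read `gpg --with-colons` output on stdin and print the fingerprint of the
# first secret primary key: the first "fpr" record after a "sec" record.
primary_fingerprint() {
    awk -F: '
        $1 == "sec" { seen_sec = 1; next }
        $1 == "fpr" && seen_sec { print $10; exit }
    '
}

# gpg --batch --with-colons --with-fingerprint -K test.user@mail.fake | primary_fingerprint
```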

Getting the OpenStack configuration

To be able to communicate with the OpenStack cloud, fetch the openrc file via the dashboard of your cloud provider. Make sure you are logged in as the correct user and with the correct project. You should be able to fetch the file from the dashboard either via the path project/api_access/openrc/ or by clicking the corresponding menu entry.

Place the fetched file in a dedicated directory:

# Create a folder for OpenStack openrc files
$ mkdir ~/.openstack
$ mv ~/Downloads/<filename> ~/.openstack/my-cluster-repository-openrc.sh

Prepare the cluster repository

# Create the Python virtual environment to isolate the dependencies
# This is the default location used by direnv
$ mkdir ~/.venv/
$ python3 -m venv ~/.venv/managed-k8s/
# Create the project folder
$ mkdir yaook-project
$ cd yaook-project
# Clone the `yaook/k8s` repository
$ git clone https://gitlab.com/yaook/k8s.git
# Create an empty directory as your cluster repository
$ git init my-cluster-repository
# Set up your environment variables
$ cp k8s/templates/envrc.template.sh my-cluster-repository/.envrc

Configure direnv

direnv is a simple way to configure directory-specific environment variables or to execute scripts automatically: as soon as you change into the directory containing the configuration data for your setup, it sets required variables like credentials and activates the Python virtual environment.

For direnv to work, it needs to be hooked into your shell, see https://direnv.net/docs/hook.html (for bash, add eval "$(direnv hook bash)" to your ~/.bashrc).

# Edit the target file my-cluster-repository/.envrc as follows by
# adapting the corresponding lines:
wg_private_key_file="${HOME}/.wireguard/wg.key"
wg_user='<however_you_want_to_name_your_wg_user>'
TF_VAR_keypair='<name_of_the_ssh_public_key_in_your_openstack_account>'
# Put this at the end of the file to load your OpenStack credentials:
source_env ~/.openstack/my-cluster-repository-openrc.sh
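Leftover placeholders are an easy mistake, so here is a small heuristic check that lists all lines of a file that still contain something of the form <...>. The helper name has_placeholders is our own; it will also flag any other text in angle brackets, so review its hits rather than trusting it blindly.

```shell
# Print (with line numbers) every line of file $1 that still contains a
# <placeholder> as described in the legend; succeed if any are left.
# Heuristic: any other text of the form <word> is reported as well.
has_placeholders() {
    grep -nE '<[^<>[:space:]][^<>]*>' "$1"
}

# has_placeholders my-cluster-repository/.envrc && echo "please replace the values above"
```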

# Change the working directory to the new cluster repository;
# direnv refuses to load the .envrc until you allow it
$ cd my-cluster-repository/
direnv: error /home/path/to/yaook-project/my-cluster-repository/.envrc is blocked. Run `direnv allow` to approve its content
$ direnv allow
# From now on, you should be asked for your OpenStack account password
# every time you enter that directory
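Once direnv has loaded the .envrc, the OpenStack credentials from your openrc file should be present in the environment. The following sketch checks a few of them; the variable list (OS_AUTH_URL, OS_USERNAME, OS_PASSWORD, OS_PROJECT_NAME) is an assumption based on typical Keystone v3 openrc files and may differ for your provider, and check_openstack_env is a hypothetical helper.

```shell
# Succeed if the variables an openrc file usually exports are set in the
# current shell; report the missing ones on stderr.
check_openstack_env() {
    local var value status=0
    for var in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_PROJECT_NAME; do
        # Indirect lookup of the variable named in $var (POSIX-compatible).
        eval "value=\${$var:-}"
        if [ -z "$value" ]; then
            echo "not set: $var" >&2
            status=1
        fi
    done
    return "$status"
}

# Run inside my-cluster-repository/ after direnv has loaded the .envrc:
# check_openstack_env && echo "OpenStack credentials loaded"
```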

Initialize the cluster repository

# Initialize the cluster repository
$ ../k8s/actions/init.sh
$ git add .
$ git commit -am 'Init the cluster repository'
# Install the requirements
$ python3 -m pip install -r managed-k8s/requirements.txt
# Don't get confused by red lines in the output; check the exit code
# to see whether the installation was successful
$ echo $? # should output 0

Edit the config.toml file

As a next step, we need to adjust the actual configuration of the k8s cluster, so please edit config/config.toml with an editor of your choice. For a full configuration reference, check:

https://yaook.gitlab.io/k8s/usage/cluster-configuration.html

Search for ANCHOR: terraform_config and add (or adapt) the following lines behind [terraform]:

# For testing, 1 is enough; for production, at least 3 are
# recommended for HA
masters = 1
workers = 1
# Specify the flavor names to use for the different node types; please
# adapt them to your cloud:
gateway_flavor = "XS"
master_flavors = ["M"]
worker_flavors = ["M"]
# Do not distribute critical components over multiple availability
# zones. Distributing them increases reliability, so don't turn this
# off in production
enable_az_management = false
# Define the AZ names to use. NOTE: The number of entries in the array
# also determines the gateway VM count! As long as
# "enable_az_management" is false, only the count matters, not the
# names. In production, adapt this to 3 real AZ names of your cloud
# provider:
azs = ["AZ1"]
# Specify the Linux distribution to use. Right now we support Ubuntu
# 18.04 and 20.04 for masters and workers and Debian 10 and 11 for the
# gateways. The names need to be adapted to the image names of your
# cloud provider
default_master_image_name = "Ubuntu 20.04 LTS x64"
default_worker_image_name = "Ubuntu 20.04 LTS x64"
gateway_image_name = "Debian 11 (bullseye)"
# Specify the name of the provider network for providing floating IPs
public_network = "shared-public-IPv4"

Search for ANCHOR: ch-k8s-lbaas_config and set shared_secret to the output of:

$ dd if=/dev/urandom bs=16 count=1 status=none | base64

shared_secret = <e.g. "JPueUpAf5FLOe6vcwQlc4w==">
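The secret above is simply 16 random bytes, base64-encoded. If you want to generate it and sanity-check it in one go, the following sketch wraps the same dd command; both function names are hypothetical.

```shell
# Generate a shared secret exactly like the dd command above: 16 random
# bytes, base64-encoded (24 characters ending in "==").
gen_shared_secret() {
    dd if=/dev/urandom bs=16 count=1 status=none | base64
}

# Succeed if $1 decodes to exactly 16 bytes.
is_shared_secret() {
    [ "$(printf '%s' "$1" | base64 -d 2>/dev/null | wc -c)" -eq 16 ]
}

# secret=$(gen_shared_secret) && is_shared_secret "$secret" && echo "shared_secret = \"$secret\""
```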

Search for ANCHOR: wireguard_config and add the following behind [wireguard]. The string xmDACa5sVBv5+nAzpzoPwDv0U00nHxvWXaAMIV466zo= is an example; use the content of your file ~/.wireguard/wg.pub instead.

[[wireguard.peers]]
pub_key = <"xmDACa5sVBv5+nAzpzoPwDv0U00nHxvWXaAMIV466zo=">
ident = <"however_you_want_to_name_your_wg_user">
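Instead of copying the public key by hand, you can print the whole peer entry from ~/.wireguard/wg.pub and paste it behind [wireguard]. This is a minimal sketch; wg_peer_toml is a hypothetical helper, and it only emits the two keys shown above.

```shell
# Print a [[wireguard.peers]] entry for config/config.toml, reading the
# public key from a file so it does not have to be copied by hand.
# $1: public key file, $2: peer name (the name you chose for wg_user).
wg_peer_toml() {
    local pub_key
    pub_key=$(tr -d '[:space:]' < "$1") || return 1
    printf '[[wireguard.peers]]\npub_key = "%s"\nident = "%s"\n' "$pub_key" "$2"
}

# wg_peer_toml ~/.wireguard/wg.pub <however_you_want_to_name_your_wg_user>
```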

Search for ANCHOR: passwordstore_configuration and add the following behind [passwordstore]:

[[passwordstore.additional_users]]
ident = <your GPG key mail address, e.g. "test.user@mail.fake">
gpg_id = <your GPG long ID, e.g. "238F9AED92DD4C36148F45F68846E45B1F4D115F">

Spawn the cluster

$ managed-k8s/actions/apply.sh

In case you want to better understand what's going on, simply check apply.sh to see which steps are executed in which order. Details about the steps can be found here: https://yaook.gitlab.io/k8s/operation/actions-references.html

Note: If you change the cloud configuration in a destructive manner (decrease node counts, change flavors, …) after the previous configuration has already been deployed, these changes will not be applied. In that case, you need to set an additional environment variable. Do not export that variable, to avoid breaking things by accident; set it only for the single command:

$ MANAGED_K8S_RELEASE_THE_KRAKEN=true managed-k8s/actions/apply.sh

Once the cluster is up, commit the state of your cluster repository:

$ git add .
$ git commit -am 'Spin up the k8s'

Deploy NGINX

Create a directory apps within the my-cluster-repository directory and in it create a file nginx-deployment.yaml with the following content:

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 1 # if you have multiple workers, you can increase this for HA
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:stable
          ports:
            - containerPort: 80
---
kind: Service
apiVersion: v1
metadata:
  name: nginx-public-ip
  annotations:
    service.beta.kubernetes.io/openstack-internal-load-balancer: "false"
spec:
  selector:
    app: nginx # must match the Pod label of the Deployment above
  type: LoadBalancer
  ports:
    - name: http
      port: 80
      targetPort: 80
  ipFamilyPolicy: SingleStack
  ipFamilies:
    - IPv4

$ kubectl apply -f apps/nginx-deployment.yaml

deployment.apps/nginx-deployment created

Once the load balancer has been provisioned, the EXTERNAL-IP column contains the public IP:

$ kubectl get svc nginx-public-ip

NAME              TYPE           CLUSTER-IP      EXTERNAL-IP       PORT(S)        AGE
nginx-public-ip   LoadBalancer   10.104.145.27   185.128.117.101   80:30541/TCP   80s
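Right after applying the manifest, the load balancer may not have an IP yet. The following sketch polls the service until an IP appears, using kubectl's JSONPath output; the function name, the number of attempts and the delay are our own assumptions, so tune them to your cloud.

```shell
# Poll until the LoadBalancer service $1 has an external IP and print it.
# $2: number of attempts (default 30), $3: seconds between attempts (default 10).
wait_for_external_ip() {
    local svc="$1" tries="${2:-30}" delay="${3:-10}" ip i=0
    while [ "$i" -lt "$tries" ]; do
        ip=$(kubectl get svc "$svc" \
            -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null || true)
        if [ -n "$ip" ]; then
            echo "$ip"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "no external IP for service $svc after $tries attempts" >&2
    return 1
}

# wait_for_external_ip nginx-public-ip
```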

Commit the manifest with git to follow the infrastructure as code approach, e.g. by:

$ git add .
$ git commit -am "Deploy NGINX"

Test your web server

Simply use your browser to access the public IP, or:

$ curl 185.128.117.101

<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>
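For a scripted version of this test, for example after redeployments, the following sketch fetches the page with curl and looks for the title of the default NGINX welcome page. check_nginx is a hypothetical helper, and the check only makes sense as long as the stock nginx image serves its default page.

```shell
# Succeed if URL $1 serves the default NGINX welcome page.
check_nginx() {
    local body
    # -f: fail on HTTP errors, -sS: quiet but still show errors.
    body=$(curl -fsS --max-time 10 "$1") || return 1
    printf '%s\n' "$body" | grep -q '<title>Welcome to nginx!</title>'
}

# check_nginx http://185.128.117.101 && echo "NGINX is up"
```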

Enjoy your cluster!

To tear down your cluster, you can run:

$ MANAGED_K8S_RELEASE_THE_KRAKEN=true managed-k8s/actions/destroy.sh