Power consumption is becoming increasingly important for information and communication technology (ICT), especially for data centers. Operators are under growing cost pressure from rising energy prices, and the enormous consumption of resources needed to generate the required energy is also a cause for concern. This is prompting policymakers to prepare concrete regulations for data center operators. EU Directive 2012/27/EU on energy efficiency already called for measures to reduce energy consumption. Germany formulates this target in its 20-20-20 goals, under which primary energy consumption is to be reduced by 20 % by 2020.

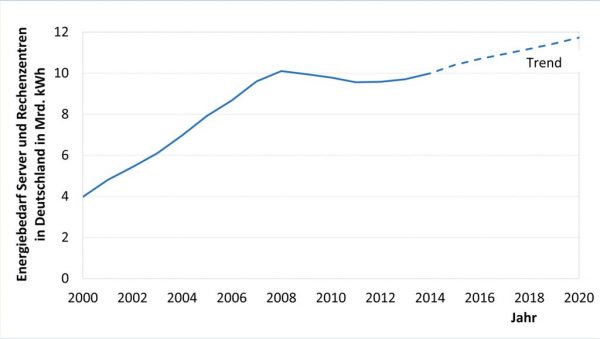

In 2014, data centers in Germany were already responsible for the consumption of around 10 billion kWh, or 1.8 % of total German electricity consumption. A comparison makes the scale clear: this corresponds to the electricity consumption of around 3 million 3-person households. On a global scale, the figures are even more impressive. At 416 TWh, the electricity consumption of the data centers installed worldwide significantly exceeded the UK’s electricity requirement of around 300 TWh. Demand for electricity also continues to grow, because the number of data centers keeps rising with the demand for computing power. In 2014, the number of physical servers in Germany had already risen to 1.7 million. Figure 1 illustrates that this development is far from stagnating.

Figure 1: Power requirements for servers and data centers in Germany (Bitkom, 2014)

The increase in electricity consumption is not necessarily due to a lack of willingness to innovate. In 2014 alone, 800 million euros were invested in the modernization and new construction of data centers in Germany to limit this development.

You can’t improve what you don’t know

Alongside technological developments in energy supply, cooling systems and waste heat utilization, an important milestone in the optimization of data centers was the systematic recording of power consumption. Only the analysis of the actual situation made it possible to evaluate measures for increasing energy efficiency transparently on the basis of energy performance indicators.

What is PUE and how can it be improved?

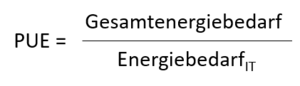

The development of an industry standard for determining the energy efficiency of data centers was promoted by The Green Grid. In 2007, the companies involved developed the key performance indicator power usage effectiveness (PUE). It is the ratio of the total energy consumption of a data center to the energy consumption of its IT components, and it is currently the only parameter that different data centers use to determine and compare their relative energy efficiency.

Ideally, all the energy is used for the IT infrastructure, in which case the PUE is 1.0. However, since additional energy is always required for losses, UPS, lighting, control technology and the cooling system including pumps, recirculation units and dry coolers, a value of 1.0 is only a theoretical target. According to Bitkom, a modern state-of-the-art data center with a physical utilization of the available rack space of approx. 50 % should achieve a PUE of 1.4 or lower.
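The ratio described above can be written as a short calculation. The following Python sketch is only an illustration: the function name `pue`, the overhead categories and all energy figures are assumptions chosen for the example, not measurements from any of the cited sources.

```python
def pue(it_energy_kwh, overhead_kwh):
    """Return total facility energy divided by IT energy.

    it_energy_kwh: energy consumed by the IT components
    overhead_kwh:  mapping of overhead category -> energy consumed
    """
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    total_kwh = it_energy_kwh + sum(overhead_kwh.values())
    return total_kwh / it_energy_kwh


# Hypothetical annual figures in kWh (assumed for illustration only)
overhead = {
    "cooling": 280_000,
    "ups_and_distribution_losses": 70_000,
    "lighting_and_controls": 50_000,
}
example_pue = pue(1_000_000, overhead)
print(f"PUE = {example_pue:.2f}")
```

With no overhead at all, the function returns exactly 1.0, which is the theoretical target value mentioned above.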

The calculation of the PUE depends on how the system boundaries within a data center are defined, see Figure 2. The energy flows recorded at these boundaries serve as the basis for determining the PUE of the data center.

Figure 2: System boundaries of data centers for the calculation of the PUE (own representation)

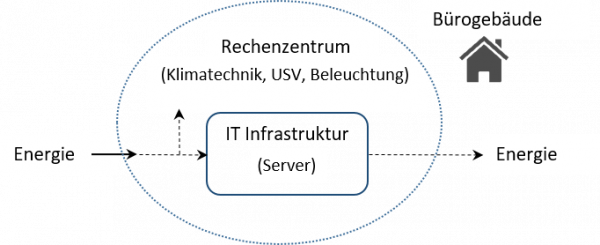

However, there is scope for interpretation when it comes to defining the IT components to be included in the calculation. For this reason, a distinction is made between PUE maturity levels, which differ in the measuring methods, measuring intervals and measuring points used, see Figure 3.

Figure 3: PUE maturity levels (Bitkom, 2015)

An important criterion for comparing the PUE of different data centers is that the same period is considered. The power requirement for cooling the IT infrastructure is considerably higher in summer than in winter. The calculation should take this into account, which is why PUE values are most meaningful when they are based on a full twelve months of measurements. If a PUE is specified for an observation period of less than one year, it is referred to as interim PUE (iPUE). This is particularly useful for determining a real-time PUE that can be used for live monitoring.
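The seasonal effect can be made concrete with a small Python sketch. All monthly energy values below are hypothetical, and the helper name `period_pue` is an assumption for this example. The sketch also assumes that the annual figure is formed from the summed energy of the whole period rather than from the mean of the monthly ratios.

```python
def period_pue(total_kwh, it_kwh):
    """PUE over a period: summed facility energy / summed IT energy."""
    return sum(total_kwh) / sum(it_kwh)


# Hypothetical monthly energy in kWh, January to December.
# IT load is assumed constant; cooling overhead peaks in summer.
it_kwh = [100_000] * 12
total_kwh = [
    125_000, 125_000, 130_000, 135_000, 145_000, 155_000,
    160_000, 160_000, 145_000, 135_000, 130_000, 125_000,
]

# One interim PUE (iPUE) per month
monthly_ipue = [t / i for t, i in zip(total_kwh, it_kwh)]
# PUE over the full twelve months
annual_pue = period_pue(total_kwh, it_kwh)

print(f"lowest monthly iPUE:  {min(monthly_ipue):.2f}")
print(f"highest monthly iPUE: {max(monthly_ipue):.2f}")
print(f"twelve-month PUE:     {annual_pue:.2f}")
```

In this made-up data set, a value taken only in January would look much better than one taken only in July, which is why two data centers should be compared over the same period.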

In the course of the standardization of data centers, in particular through the DIN EN 50600 series of standards, the PUE has become the industry standard for calculating energy efficiency. This European standard not only addresses topics such as construction, power supply and air conditioning, but also takes a holistic view of all issues relevant to data centers, including management and operation.

Air or water cooling: which is ahead in PUE?

Conventional air-cooled data centers still account for a large share of installed server systems. Their biggest drawback is that the air conditioning technology often makes cooling responsible for around 40 % of energy consumption. Modern air-cooled data centers therefore achieve an average PUE of around 1.5. The use of water as a cooling medium has established itself as an alternative. Water has significant physical advantages over air when absorbing heat: its heat capacity per unit volume is around 3300 times higher, and its thermal conductivity is around 20 times higher. However, not all water cooling is the same. A distinction is made between direct hot water cooling and indirect water cooling via sidecoolers. With sidecoolers, fans blow the heated air out of the servers, as in air cooling, and air-water heat exchangers located there then cool the air. In direct hot water cooling, by contrast, the water is routed directly past the heat-emitting components to absorb the heat they give off. This cooling technology is efficient enough that additional fans and air conditioning can be dispensed with, yielding significant efficiency gains.

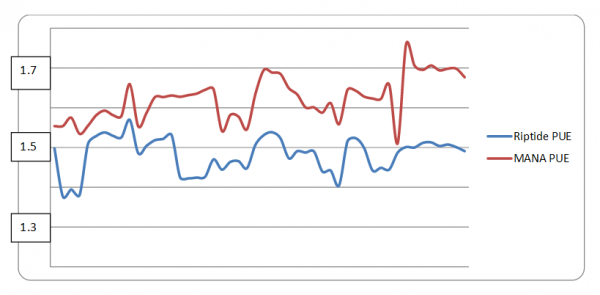

A direct comparison of both cooling systems was carried out in a study by the U.S. Department of Energy. In an existing high-performance computing (HPC) data center, the air cooling system was replaced by one with direct water cooling. Running the Linpack benchmark on both configurations allowed the PUE to be compared for the same performance. Figure 4 clearly shows that the PUE of the water-cooled system is not only significantly lower than that of the air-cooled alternative, but also fluctuates significantly less.

Figure 4: PUE comparison of HPC cooling systems with direct water and air cooling for the Linpack benchmark test (based on US Department of Energy, 2014)

The world’s lowest PUE is currently 1.014. This record value was achieved by Cloud&Heat Technologies and was possible primarily due to direct hot water cooling as well as demand control and preheating of the required cooling air through an underground car park. By comparison, Google’s PUE reaches 1.14 across data centers, with Facebook reporting a PUE of 1.09 for its largest data center in Prineville, Oregon.

Innovations “outside the box”

On the way to ever lower PUEs, some companies are also trying out completely new concepts. The Internet service provider IGN from Munich, in cooperation with Rittal, has implemented a cooling system that uses groundwater as a coolant. The cool groundwater is pumped from a well into a water circuit cooled by heat exchangers, which supplies eight redundant recirculation air conditioning systems. In combination with optimized cold air ducting, a PUE of 1.2 was achieved. Microsoft’s research experiment “Project Natick” goes even further. To minimize the energy required for cooling, it takes advantage of the low temperature of the sea: a container data center was sunk in the Pacific Ocean so that the low ambient temperature covers the cooling requirement. Whether this is a model for the future remains to be seen.

A good PUE is not everything

However, looking at PUE in isolation can lead to false conclusions about the actual efficiency of a data center. This is illustrated by the following example. Let’s assume that in a data center with 1,000 servers, 700 servers run at idle due to a lower overall utilization rate. To save energy, these 700 servers are switched off. Although this reduces energy consumption, it increases the PUE, because the denominator of the formula, the power consumption of the remaining 300 servers, decreases proportionally much more than the numerator, the total energy requirement. There is also room for improvement in the remaining servers. Their utilization can be increased further by consolidating workloads on some servers through virtualization, for example with OpenStack, so that others can be shut down. This saves energy and ultimately also costs.
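The effect in this example can be reproduced with a few lines of Python. The server counts come from the text above; everything else (the power draw per server, a fixed overhead that does not scale with load, and a variable overhead proportional to IT load) is a hypothetical assumption chosen only to make the arithmetic visible.

```python
# All four constants are assumptions for illustration, not measured values.
ACTIVE_KW = 0.30           # assumed draw of a busy server
IDLE_KW = 0.10             # assumed draw of an idle server
FIXED_OVERHEAD_KW = 40.0   # assumed overhead independent of IT load
VARIABLE_OVERHEAD = 0.20   # assumed overhead per kW of IT load


def facility(active_servers, idle_servers):
    """Return (total power in kW, PUE) for a given server mix."""
    it_kw = active_servers * ACTIVE_KW + idle_servers * IDLE_KW
    total_kw = it_kw + FIXED_OVERHEAD_KW + VARIABLE_OVERHEAD * it_kw
    return total_kw, total_kw / it_kw


before_total, before_pue = facility(active_servers=300, idle_servers=700)
after_total, after_pue = facility(active_servers=300, idle_servers=0)

print(f"before: {before_total:.0f} kW total, PUE {before_pue:.2f}")
print(f"after:  {after_total:.0f} kW total, PUE {after_pue:.2f}")
```

Under these assumptions, total power falls from 232 kW to 148 kW while the PUE rises from 1.45 to about 1.64. The data center uses less energy, yet its PUE looks worse, because the fixed overhead is now spread over a smaller IT load.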

To increase the informative value of energy performance indicators, the inclusion of further indicators in DIN EN 50600 is currently being discussed. A combination of these values should provide a more comprehensive picture of the efficiency of a data center. Energy recovery, for example, offers great potential for increasing energy efficiency. If the heat emitted is fed into a heating system, the energy consumption of that system can be reduced. In this case, however, the waste heat reduces the energy requirement at points outside the system boundaries of the data center and is therefore not reflected in the PUE. One indicator that is better suited to assessing a data center with regard to its energy recovery is “Energy Reuse Effectiveness” (ERE).
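ERE is commonly defined like PUE, except that the energy reused outside the data center is subtracted from the total before dividing by the IT energy. The Python sketch below follows that definition; the function names and all energy figures are hypothetical and serve only to show how the two indicators diverge.

```python
def pue(total_kwh, it_kwh):
    """Power usage effectiveness: total energy / IT energy."""
    return total_kwh / it_kwh


def ere(total_kwh, reused_kwh, it_kwh):
    """Energy reuse effectiveness: (total - reused energy) / IT energy."""
    if not 0 <= reused_kwh <= total_kwh:
        raise ValueError("reused energy must lie between 0 and total energy")
    return (total_kwh - reused_kwh) / it_kwh


# Hypothetical annual figures in kWh (assumed for illustration only)
total_kwh = 1_400_000
it_kwh = 1_000_000
reused_kwh = 300_000   # waste heat fed into a heating system

example_pue = pue(total_kwh, it_kwh)
example_ere = ere(total_kwh, reused_kwh, it_kwh)
print(f"PUE = {example_pue:.2f}, ERE = {example_ere:.2f}")
```

Without any reuse, ERE equals PUE. With reuse, ERE drops below PUE and, unlike PUE, can fall below 1.0 if enough waste heat is recovered.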