In a series of 3 blog posts, Confluent and Cloud&Heat explore the use of floating workloads for improving data processing efficiency and streaming data management.

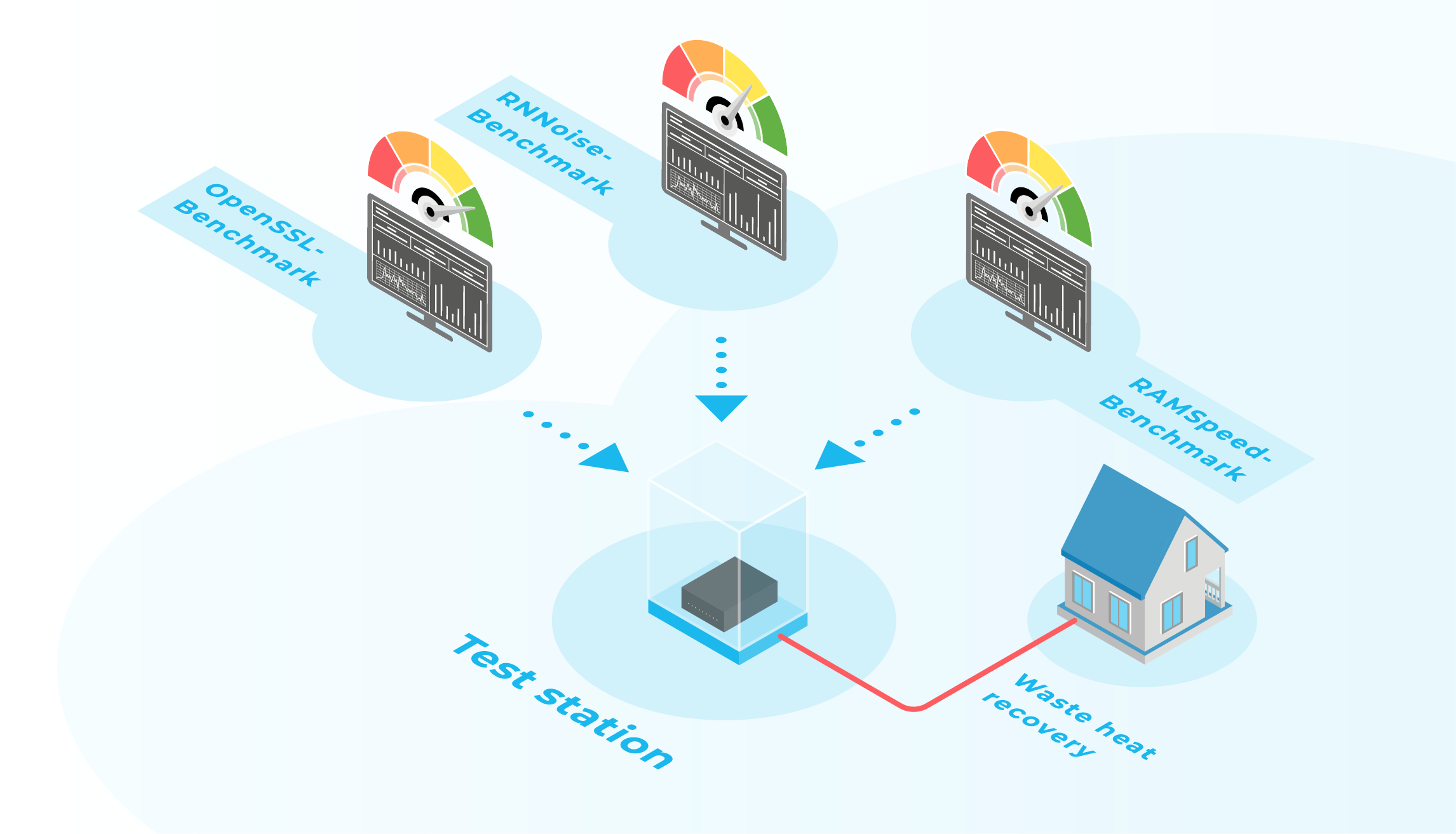

In the future of decentralized digital infrastructure, cloud applications run on a plethora of datacenters that fit their regional legal, technical, and energy requirements. To efficiently orchestrate them, optimizing the energy utilization of the distributed cloud infrastructure globally can be achieved by shifting applications between sites or delaying the execution of applications, depending on ecological factors.

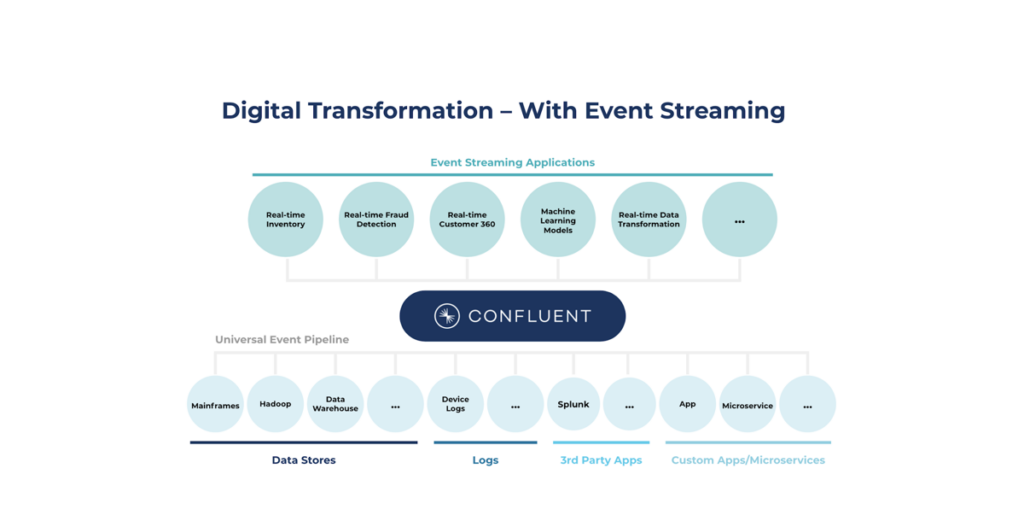

Our solution demonstrates how we combine the Confluent Platform with Krake in a two-layer data plane to manage floating workloads for improving processing efficiency:

- Confluent addresses the “data plane”—data can be ingested and consumed at any point of the distributed infrastructure without user intervention

- Krake addresses the “application plane”—applications can be deployed at any point of the distributed infrastructure without user intervention.

This accomplishes the following objectives:

- Distributed datacenters: Enable the full advantages of edge cloud platforms for cloud users, including applications which previously didn’t fit the cloud model

- Global datacenter linking: Confluent Platform allows you to create a data plane between and across datacenters, which will exist as central systems or as decentralized solutions. Such a data plane provides the integration and transportation mechanisms for global cluster integration and Cluster Linking. The result is transparent access to data streams and data assets managed on the data plane.

- Software-defined energy efficiency: Optimize the energy efficiency of the distributed cloud infrastructure through load shifting and delayed execution.

Sounds interesting ? The first part is available on the Confluent blog. It focuses on the overall motivation and objectives and introduces key concepts. Part 2 and part 3 will cover the technical setup used during our experiments and present the results.

Stay tuned !

About Confluent

Confluent is founded by the original creators of Apache Kafka®. The California-based company provides the industry’s only enterprise-ready Event Streaming Platform, driving a new paradigm for application and data infrastructure. With Confluent Platform you can leverage data as a continually updating stream of events rather than as discrete snapshots. Over 60% of the Fortune 100 leverage event streaming – and the majority of those leverage Confluent Platform. Confluent provides a single platform for real-time and historical events, enabling you to build an entirely new category of event-driven applications and gain a universal event pipeline.Confluent Platform makes it easy to build real-time data pipelines and streaming applications by integrating data from multiple sources and locations into a single, central Event Streaming Platform for your company. Confluent Platform lets you focus on how to derive business value from your data rather than worrying about the underlying mechanics such as how data is being transported or massaged between various systems. Specifically, Confluent Platform simplifies connecting data sources to Kafka, building applications with Kafka, as well as securing, monitoring, and managing your Kafka infrastructure.