Pushing the boundary conditions of data centers facilitates innovative circular economy approaches

Authors: Dr. Andreas Hantsch, Leonie Banzer, Conrad Wächter, Anne Weisemann, Dr. Jens Struckmeier¹

Date: November 30th, 2021

About

This blog post reproduces a paper that received the award for the best contribution in the sustainable tech track and the best paper award of the Open Compute Project Future Technology Symposium 2021, held in San José from November 8th to 10th². In addition, Leonie Banzer was honored for her outstanding presentation of our paper on stage.

Abstract

Data are the new fuel. We call it ‘Energy to Data, Data to Heat’. Placing data centers at locations where the demands for information technology and heat coincide, combined with server virtualization and load optimization, enables circular economy approaches. We investigate the effect of direct hot-liquid cooling characteristics on the energy reusage factor. Depending upon the location, values of 85 % to slightly less than 100 % are achievable. Optimized servers and components contribute significantly to this objective.

Introduction

Data centers (DC) consume a significant amount of energy. Their global annual electricity demand was approx. 200 TWh in 2018 [1]. Unfortunately, all the electrical energy supplied to information technology (IT) devices is eventually converted into heat, which is usually dissipated to the environment and wasted. However, rising concerns about climate change led to the Paris Agreement [2], and various measures have subsequently been defined to reduce energy consumption and the corresponding carbon dioxide emissions. Among those, the reuse of waste heat in conjunction with renewable energies leads to very promising circular economy approaches.

There is a variety of measures available to cool a DC [3]. Mechanical cooling is widespread, extracting the server heat via room air-conditioning systems. It is usually accompanied by free-cooling, which bypasses the refrigeration system, or by direct ambient cooling with cold ambient air or water. Direct hot-liquid cooling (DHLC), where flow-through cooling kits are mounted directly on the chips, allows for the highest coolant temperatures and, thus, the highest energy efficiency because almost all the heat is captured in the liquid [3].

In order to achieve the highest efficiency, we propose to place edge DC at locations where IT demand and heat demand coincide. Virtualization of servers and smart load-balancing via frameworks [4] support these efforts. Moreover, high heat capture rates of DHLC and high temperatures are beneficial for including DC in circular economy concepts [5], such as heating, thermal cooling, seawater desalination, electricity generation, and the like. From the authors’ experience, these can be achieved only if manufacturers of chips and servers optimize their products for DHLC rather than air-cooling. We show the influence of these parameters on the heat recovery potential of DC.

Simulations

An algebraic model is employed to carry out annual simulations with hourly time steps. This allows the influence of climatic data on the heat transfer through the envelope and on the heat rejection performance, as well as variable IT loads of various server types, to be considered. For each of the time steps, the following equations are solved [3]:

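$$E_{\mathrm{IT},i} = E_{\mathrm{Computing},i} + E_{\mathrm{Storage},i} + E_{\mathrm{Network},i}, \tag{1}$$

$$E_{\mathrm{Periphery},i} = E_{\mathrm{UPS},i} + E_{\mathrm{el,losses},i} + E_{\mathrm{RAC},i} + E_{\mathrm{DHLC},i} + E_{\mathrm{MON},i} + E_{\mathrm{ICA},i} + E_{\mathrm{HR},i} + E_{\mathrm{HRC},i}, \tag{2}$$

$$Q_{\mathrm{recoverable},i} = Q_{\mathrm{DHLC},i} + Q_{\mathrm{RAC2DHLC},i} - Q_{\mathrm{HR},i} - Q_{\mathrm{int.losses},i}. \tag{3}$$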

Herein, $E_{\mathrm{Computing},i}$, $E_{\mathrm{Storage},i}$, and $E_{\mathrm{Network},i}$ are the energies required for computing, storage, and network devices, respectively. $E_{\mathrm{UPS},i}$, $E_{\mathrm{el,losses},i}$, $E_{\mathrm{RAC},i}$, $E_{\mathrm{DHLC},i}$, $E_{\mathrm{MON},i}$, $E_{\mathrm{ICA},i}$, $E_{\mathrm{HR},i}$, and $E_{\mathrm{HRC},i}$ are the energies for the uninterruptible power supply (UPS), electrical losses, room air-conditioning (RAC), DHLC, monitoring (MON), instrumentation, control, and automation (ICA), heat rejection (HR), and heat recovery (HRC), respectively. $Q_{\mathrm{DHLC},i}$, $Q_{\mathrm{RAC2DHLC},i}$, $Q_{\mathrm{HR},i}$, and $Q_{\mathrm{int.losses},i}$ are the heat captured in DHLC, the heat supplied from room air-conditioning to the hot-liquid circuit, the heat rejected to the ambient, and the internal heat losses, respectively.

Summation over all time steps $i$ (i.e., 8760 h/a) provides the annual energies for IT, $E_{\mathrm{IT,an}}$, and periphery, $E_{\mathrm{Periphery,an}}$, as well as the annual recoverable heat, $Q_{\mathrm{recoverable,an}}$. Based on these values, the Energy Reusage Factor (ERF) can be evaluated [3]:

$$\mathrm{ERF} = \frac{Q_{\mathrm{recoverable,an}}}{E_{\mathrm{IT,an}} + E_{\mathrm{Periphery,an}}}. \tag{4}$$
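The balance equations (1), (3), and (4) can be sketched in a few lines of Python. This is only an illustration of the bookkeeping, not the simulation model used for the results: the class and function names are hypothetical, the periphery energy of Eq. (2) is passed in as a single pre-summed value, and the numbers in the example are made up for demonstration.

```python
from dataclasses import dataclass


@dataclass
class HourlyBalance:
    """Energies and heats of one hourly time step, all in kWh (hypothetical container)."""
    e_computing: float
    e_storage: float
    e_network: float
    e_periphery: float   # sum of all terms on the right-hand side of Eq. (2)
    q_dhlc: float        # heat captured in the liquid
    q_rac2dhlc: float    # heat supplied from room air-conditioning to the hot-liquid circuit
    q_hr: float          # heat rejected to the ambient
    q_int_losses: float  # internal heat losses

    @property
    def e_it(self) -> float:
        # Eq. (1): IT energy of the time step
        return self.e_computing + self.e_storage + self.e_network

    @property
    def q_recoverable(self) -> float:
        # Eq. (3): recoverable heat of the time step
        return self.q_dhlc + self.q_rac2dhlc - self.q_hr - self.q_int_losses


def energy_reusage_factor(steps) -> float:
    """Eq. (4): annual recoverable heat over annual IT plus periphery energy."""
    steps = list(steps)
    e_it_an = sum(s.e_it for s in steps)
    e_periphery_an = sum(s.e_periphery for s in steps)
    q_recoverable_an = sum(s.q_recoverable for s in steps)
    return q_recoverable_an / (e_it_an + e_periphery_an)


# Made-up example: the same hourly balance repeated for all 8760 hours of a year.
example_step = HourlyBalance(400.0, 60.0, 40.0, 50.0, 450.0, 30.0, 20.0, 10.0)
erf = energy_reusage_factor([example_step] * 8760)
```

In a real simulation each hourly entry would differ, because the climatic data and the IT load change from hour to hour.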

Computations are carried out for a one-megawatt DC with an average part load of 50 %. Free-cooling of the air is considered if the ambient temperature is at least 9 K lower than the IT room temperature (here: 22 °C [3]).
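The free-cooling criterion can be expressed as a simple threshold check on the hourly ambient temperature. The sketch below reads the criterion as "ambient temperature at least 9 K below the room temperature", i.e., 13 °C or colder, which is an interpretation of the sentence above. The function names are hypothetical, and the temperatures in the example are arbitrary rather than real climate data.

```python
IT_ROOM_TEMPERATURE_C = 22.0  # IT room temperature stated in the text
FREE_COOLING_MARGIN_K = 9.0   # required temperature difference stated in the text


def free_cooling_available(ambient_c: float) -> bool:
    """True if the ambient air is cold enough for free-cooling (assumed: <= 13 °C)."""
    return ambient_c <= IT_ROOM_TEMPERATURE_C - FREE_COOLING_MARGIN_K


def free_cooling_share(hourly_ambient_c) -> float:
    """Fraction of the given hours in which free-cooling is available."""
    temperatures = list(hourly_ambient_c)
    if not temperatures:
        raise ValueError("at least one temperature value is required")
    hours_available = sum(free_cooling_available(t) for t in temperatures)
    return hours_available / len(temperatures)


# Arbitrary example values, not measured climate data.
share = free_cooling_share([5.0, 13.0, 20.0, 30.0])
```

Applied to a full year of hourly weather data, such a share indicates how much of the air-side heat can be rejected without mechanical cooling at a given location.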

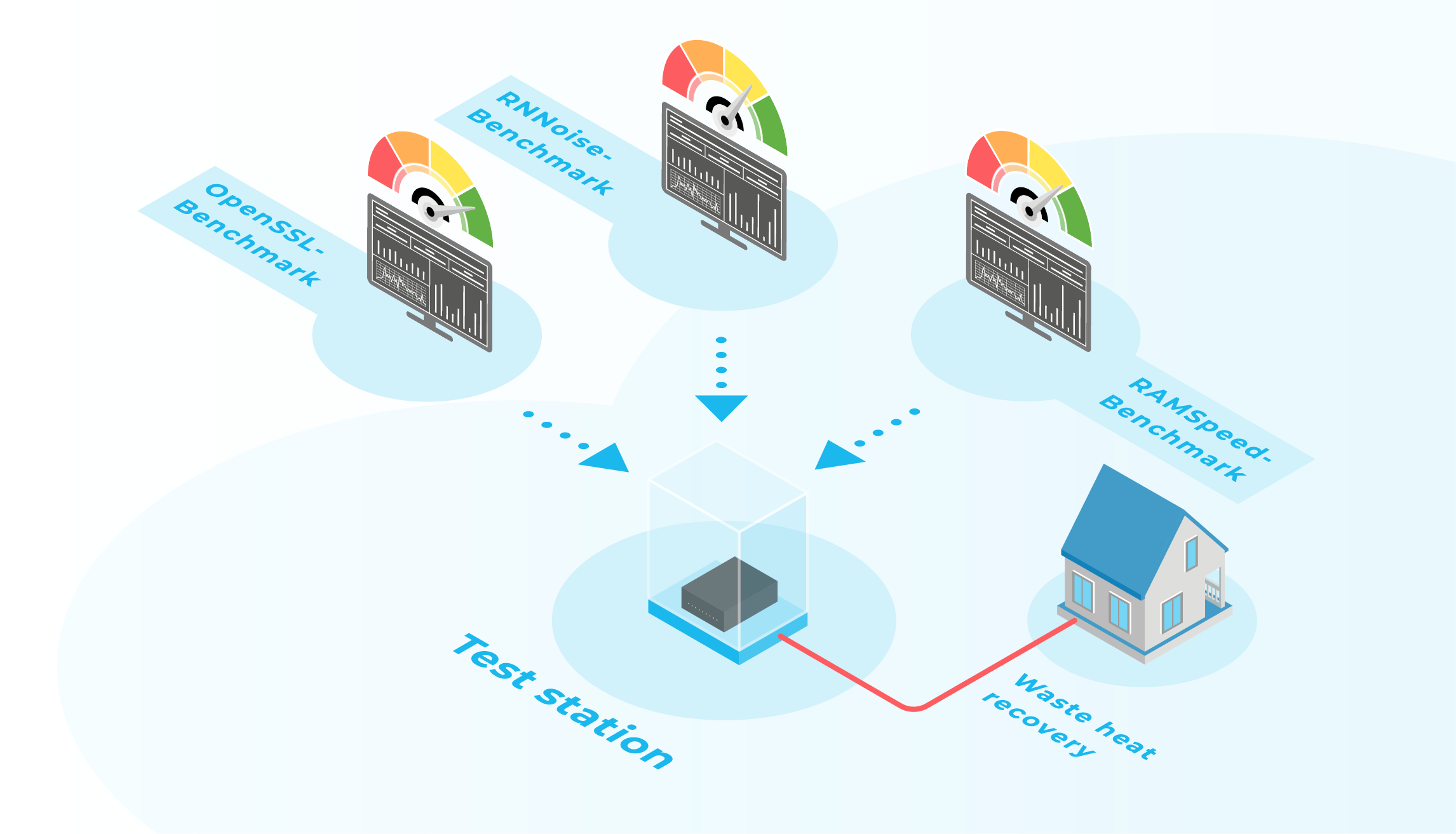

Experiments

Experiments are carried out to study the relation between the temperatures of central (CPU) and graphical (GPU) processing units and the coolant outlet temperature of DHLC servers. For this purpose, the servers are placed in an air-conditioned and insulated chamber, with a temperature-controlled liquid circuit providing the DHLC capacity. Temperatures are measured at various places: the air inside and outside the chamber and the coolant at the inlet and outlet. In addition, the humidity of the air inside the chamber, the flow rate of the coolant, the pressure drop over the server, and the powers for conditioning air and coolant are measured, and the temperature readings of the CPU and GPU are recorded. The tests were carried out with highly demanding LINPACK benchmarks after thermal steady state had been reached. Each measurement comprised at least 30 recordings, allowing a statistical evaluation [6]. Consequently, the uncertainty of the coolant temperature reading is approx. ± 1 K, and the uncertainty of the chip temperatures was assumed to be ± 1 K. We tested various DHLC servers from different vendors and with different levels of DHLC; they are anonymized here.
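A statistical evaluation of such a series of recordings can be sketched as follows. The exact procedure is not described here, so this is an assumption: the sketch computes the mean and a confidence half-width from the standard error, using a normal quantile, which is a common approximation for 30 or more samples (a Student t quantile would give a slightly wider interval). It covers only the scatter of the readings, not the accuracy of the sensors themselves. The function name and the example readings are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_with_uncertainty(readings, confidence=0.95):
    """Return (mean, half-width of the confidence interval) of repeated readings.

    Assumes independent readings and uses the normal approximation.
    """
    values = list(readings)
    n = len(values)
    if n < 2:
        raise ValueError("at least two readings are required")
    standard_error = stdev(values) / sqrt(n)
    # Two-sided quantile of the standard normal distribution.
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    return mean(values), z * standard_error


# Hypothetical coolant outlet readings in °C, 30 recordings in total.
example_readings = [50.0] * 15 + [52.0] * 15
outlet_mean, outlet_half_width = mean_with_uncertainty(example_readings)
```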

Results and Discussion

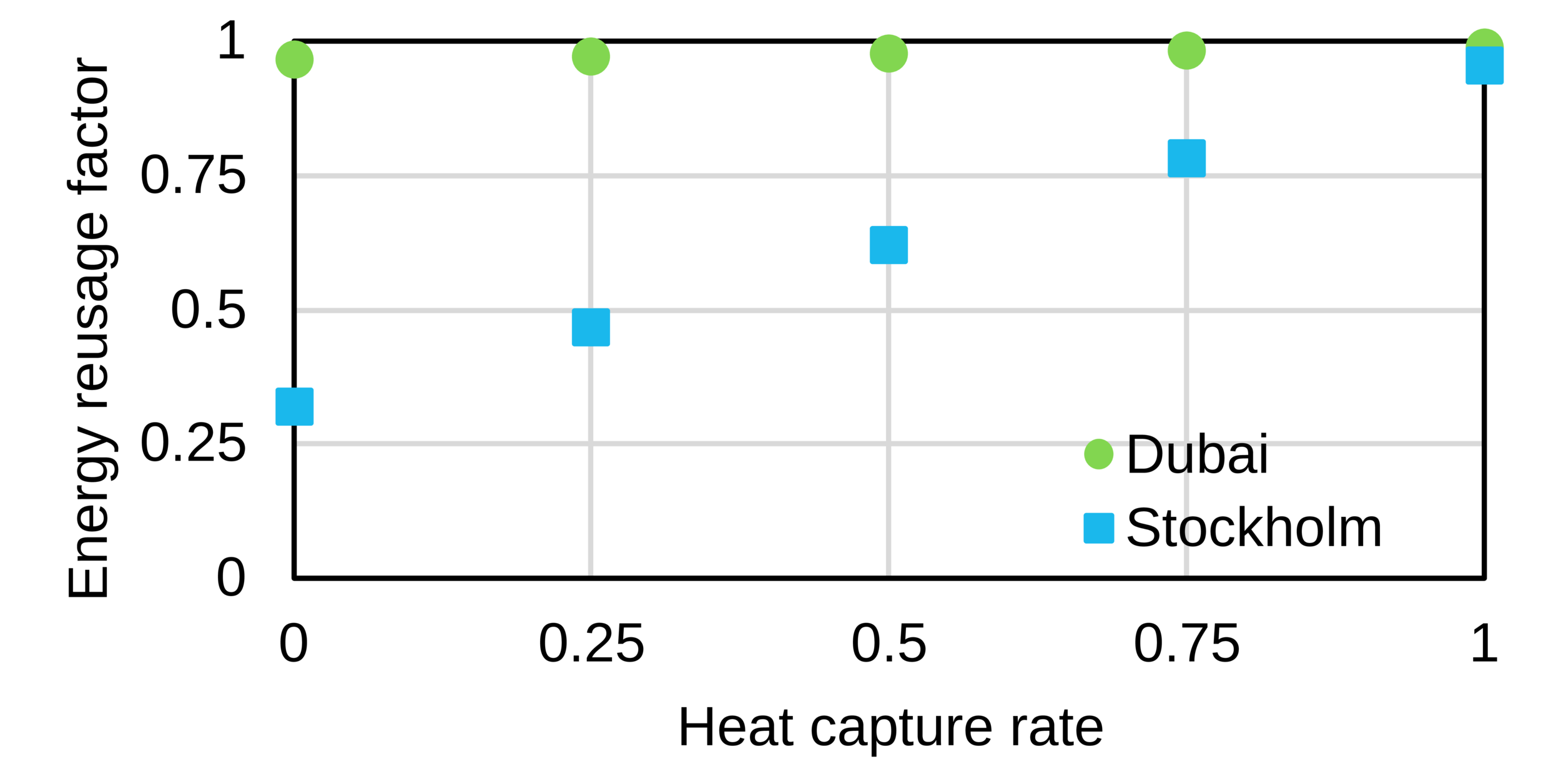

The influence of the heat capture rate, i.e., the percentage of server heat captured in the liquid, on the energy reusage factor of a DHLC DC is illustrated in Fig. 1. The effect is relatively small for a hot climate, whereas for a cold climate, higher heat capture rates yield significantly higher energy reusage factors. The reason is that with increasing heat capture rates, more heat is captured in the liquid and, thus, less remains in the air. In cold climates, free-cooling to the environment is the most energy-efficient and preferred air-cooling mode, so a lot of the heat in the air is dissipated to the ambient and is lost for recovery. In contrast, in hot climates, where free-cooling is not available for a larger percentage of the year, the heat in the air has to be treated by air-conditioning. The Cloud&Heat system utilizes the heat of the condenser side of the cooling unit and provides it for recovery.

Fig. 1: Energy Reusage Factor depending upon Heat Capture Rate for cold (Stockholm, Sweden) and hot climate (Dubai, United Arab Emirates).

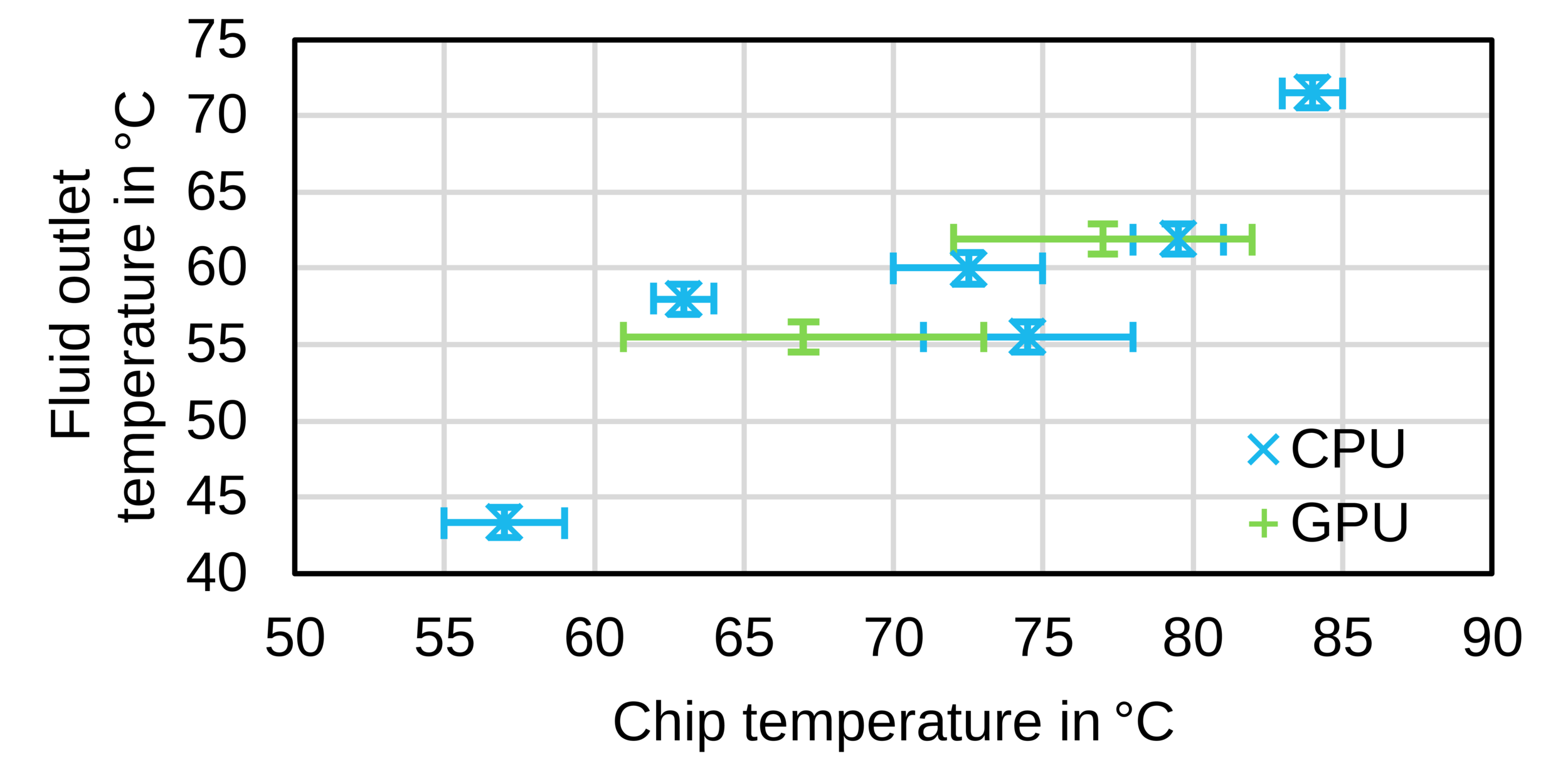

Figure 2 provides insight into the relation between coolant outlet temperature and chip temperature. Outlet temperatures between 43 and 72 °C were recorded. The relation is, however, only a trend, as the data show a high degree of dispersion. Notably, in multi-CPU and multi-GPU systems, the chip temperatures are not evenly distributed in all systems. Therefore, the outlet temperature, which is the calorific mean of all the parallel sub-streams, is relatively low. A distinct server design for DHLC would be beneficial to achieve the highest coolant temperatures at adequate chip temperatures.
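The calorific mean of parallel sub-streams can be illustrated with a short sketch. It assumes the same constant specific heat capacity of the coolant in all sub-streams, so that the mixing temperature reduces to a mass-flow-weighted average. The function name and the example flow rates and temperatures are hypothetical and not taken from the measurements.

```python
def calorific_mean_temperature(sub_streams) -> float:
    """Mixing temperature of parallel coolant sub-streams in °C.

    sub_streams: iterable of (mass_flow_kg_per_s, outlet_temperature_c) pairs.
    Assumes an identical, constant specific heat capacity in every sub-stream.
    """
    streams = list(sub_streams)
    total_flow = sum(flow for flow, _ in streams)
    if total_flow <= 0:
        raise ValueError("total mass flow must be positive")
    return sum(flow * temperature for flow, temperature in streams) / total_flow


# Hypothetical example: a hot and a cooler chip with equal coolant flow.
mixed_outlet_c = calorific_mean_temperature([(0.02, 70.0), (0.02, 50.0)])
```

With equal flows, a 70 °C and a 50 °C sub-stream mix to about 60 °C, which shows how a single cooler branch pulls down the common outlet temperature.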

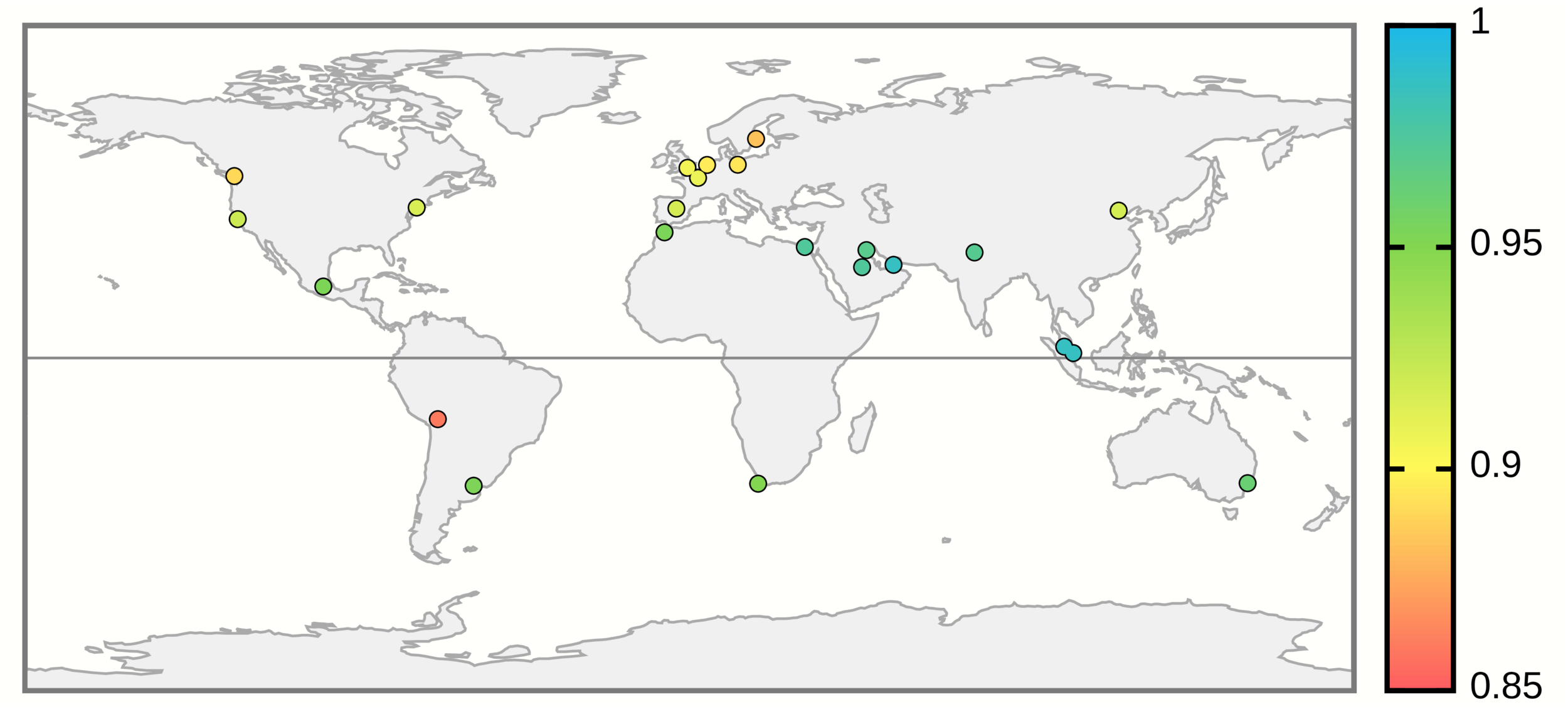

The combined influence of both effects, a high heat capture rate (0.9) and a high coolant outlet temperature (up to 60 °C), is visualized for various places worldwide in Fig. 3. It can be seen that the ERF is between 85 % and almost 100 %, depending upon the location. It should be noted that thermal losses from the DC to the heat recovery depend very much upon the implementation and are therefore not considered here.

Summary and outlook

We carried out annual simulations and experiments to show the positive effect of direct hot-liquid cooling (DHLC) in conjunction with server virtualization and load optimization. It was highlighted that high heat capture rates in the server yield high energy reusage factors of 85 % to almost 100 %, depending upon the location. The maximum coolant outlet temperature depends strongly upon the server system; temperatures of 43 to 72 °C were recorded.

In order to facilitate circular economy approaches and heat utilization, both chip manufacturers and server vendors are asked to provide infrastructure that is optimized for DHLC. The potential to be gained is enormous: if the waste heat of the DC is used to produce potable water from seawater, a 40 MW DC installation would be sufficient to cover the annual bottled water consumption of all residents of the United Arab Emirates [7].

Acknowledgements

This work has been supported by the Federal Ministry of Education and Research of the Federal Republic of Germany through the contract 01LY1916C.

References

[1] Masanet, E.; Shehabi, A.; Lei, N.; Smith, S. & Koomey, J. Recalibrating global data center energy-use estimates, Science, 2020, vol. 367, pp. 984-986

[2] United Nations Framework Convention on Climate Change (ed.) Adoption of the Paris Agreement, number FCCC/CP/2015/L.9/Rev.1

[3] ASHRAE TC 9.9, Thermal Guidelines for Data Processing Environments, 4th ed., Vol 1. Atlanta, GA: ASHRAE, 2015

[4] https://gitlab.com/rak-n-rok/krake, downloaded on 23rd of July 2021

[5] Hantsch, A. Energieeffizienz durch softwaregeführtes Lastmanagement in verteilten Rechenzentren, Bitkom AK Software Engineering und AK Software Architektur – Software meets Sustainability, 16th June 2020

[6] Bronstein, I. N.; Semendjajew, K. A.; Musiol, G. & Mühlig, H. Taschenbuch der Mathematik, Frankfurt: Harri Deutsch, 2001

[7] Dakkak, A. Water Management in UAE, 2020. https://www.ecomena.org/water-management-uae/, downloaded on 23rd of July 2021

¹ Cloud&Heat Technologies GmbH, Königsbrücker Straße 96, 01099 Dresden, Germany

² https://www.opencompute.org/summit/ocp-future-technologies-symposium/upcoming-events