This blog post will be a full tutorial about the usage of the "era" target of device mapper, also known as "dm-era". It provides a step by step walkthrough for setting up the device mapper mappings and parsing the resulting metadata.

The "era" target of device mapper can be quite powerful when looking for live tracking of changed blocks on an arbitrary block device, no matter if physical or logical. Since it is a low-level device mapper target it supports raw/physical block devices, device mapper targets as well as LVM volumes to keep track of.

The dm-era target has been an integral part of the Linux kernel since version 3.15. So it has been around for quite a while already; however, documentation is still astonishingly sparse. Essentially, I could only come up with three results while searching the web: the official kernel docs [1], a blog post by Rackcorp [2], and a Gentoo wiki entry [3].

Since the kernel docs and the Gentoo wiki entry only provide the most basic information without full usage examples, the Rackcorp blog entry is the most elaborate guide - but not without its flaws.

The essential part about working with device mapper is to understand that the basic size unit used is sectors. Whenever specifying a size for mappings, keep in mind it's at sector level. Usually a sector is defined as 512 bytes.

Device Mapper Mappings

The basic modus operandi of device mapper is to apply mappings to block devices. That means you will be building layers above block devices, creating new block devices in the process. This is achieved by "targets", which are modules for device mapper that provide a particular mapping functionality.

The most basic target is "linear", which means the resulting block device will be a mapping to one or more linear sections of one or more other block devices. This way it's possible to logically merge two block devices into one or to split one up into several (like logical partitions). Please refer to one of the many documents or tutorials about the "linear" device mapper target to learn more.
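As a rough illustration of the merging case, a multi-line table can map consecutive sector ranges of the new device onto different devices. The sketch below only prints such a table and does not touch any device. The helper name `concat_table` and the devices /dev/sdx and /dev/sdy are made up for this example.

```shell
#!/bin/sh
# concat_table DEV SECTORS [DEV SECTORS ...]
# Prints a device mapper table that concatenates the given devices
# using the "linear" target. Each output line has the form:
#   <start sector> <length in sectors> linear <device> <offset on device>
concat_table() {
    start=0
    while [ "$#" -ge 2 ]; do
        dev=$1
        len=$2
        shift 2
        echo "$start $len linear $dev 0"
        start=$((start + len))
    done
}

# Hypothetical example: two 256 MB drives (524288 sectors each)
concat_table /dev/sdx 524288 /dev/sdy 524288
```

The printed table could then be piped into `dmsetup create <name>` (as root), which reads the table from standard input when no `--table` option is given.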

In this guide we will be focussing on the cooperation of "linear" and "era" device mapper targets to track changed blocks on a block device in realtime.

The Era Target

The "era" target behaves similarly to the "linear" target, but additionally it writes metadata to a separate block device, which is used to keep track of changed blocks on the origin block device. To be precise, the "era" target keeps track of changed blocks within a specific period of time, called an era. The era is a monotonically increasing counter, and each block has an era associated with it: the one in which it was last updated. The era can be increased by command and is also automatically increased when grabbing a metadata snapshot to inspect changed blocks. This allows tracking changed blocks between those snapshots and can be used for a variety of use cases, e.g. incremental backup or replication strategies.

Other than that, the "era" target does an identity mapping to the underlying origin block device, normally chosen the same size as its origin.

Creating a playground VM using Vagrant

For this tutorial, we will be setting up a virtual machine (VM) with 2 additional drives using VirtualBox. We will be using the VM and the drives to create a sample dm-era setup, so you can easily recreate an identical setup and follow the instructions step-by-step. To make things quick and easy regarding the VM setup, we will be using Vagrant to automatically set this up. Place the following file named "Vagrantfile" into a (preferably empty) folder of your choice:

extdisk1 = './extdisk1.vdi'

extdisk2 = './extdisk2.vdi'

Vagrant.configure("2") do |config|

config.vm.define "alice" do |alice|

alice.vm.provider "virtualbox" do |vbox|

vbox.name = "dm-era-testvm"

unless File.exist?(extdisk1)

vbox.customize ['createhd', '--filename', extdisk1, '--size', 256]

vbox.customize ['storageattach', :id, '--storagectl', 'SATA Controller', '--port', 1, '--device', 0, '--type', 'hdd', '--medium', extdisk1]

end

unless File.exist?(extdisk2)

vbox.customize ['createhd', '--filename', extdisk2, '--size', 256]

vbox.customize ['storageattach', :id, '--storagectl', 'SATA Controller', '--port', 2, '--device', 0, '--type', 'hdd', '--medium', extdisk2]

end

end

alice.vm.box = "bento/ubuntu-16.04"

alice.vm.hostname = "alice"

end

end

This Vagrantfile will download the "bento/ubuntu-16.04" image from the Vagrant repositories, which is a pre-configured Ubuntu 16.04 minimal VM. Additionally, it will create two 256 MB virtual disk files and attach them to the VM.

After saving the file, start up the VM from command line in the same folder:

vagrant up

This will completely set up and start the VM. Once it has finished, you can SSH into the new VM:

vagrant ssh alice

(You may omit stating the machine name, if your Vagrantfile contains only one VM)

Now verify that the additional drives exist:

vagrant@alice:~$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

...

sdb 8:16 0 256M 0 disk

sdc 8:32 0 256M 0 disk

It should list two additional drives sized 256M each. Now we've got our playground ready, let's start setting up dm-era!

Device Mapper setup

In contrast to Rackcorp's tutorial [2], we will be using only device mapper in this guide, no LVM. Keep in mind, that you can use the dm-era mapping on LVM volumes or simply any block device just fine as well. LVM is simply using device mapper in the background anyway. All following instructions and examples are based on the sample VM setup as described above.

The resulting setup we will try to achieve will look as follows:

The two physical drives (sdb and sdc) each fulfil a different purpose: sdb will only be used for holding the era metadata (information about changed blocks), whereas sdc will be the data device (origin), whose changed blocks we want to track. Holding both the metadata and the origin on the same physical drive is not supported [4].

The era device - simply called "era" - will be layered on top of the origin drive (sdc), similar to a linear mapping. Further, we will logically partition sdb by applying a fixed-size linear mapping called "era-meta" to it, which will hold the metadata. Since the metadata can't be read directly from that device, a second linear mapping is required for metadata snapshots and read operations. We will create this as "era-access", with the same size as the metadata mapping, on top of the "era-meta" device.

Before beginning to set this up, we have to determine the size required by the metadata. And this is a tricky topic.

Determining Metadata size

(You can skip this chapter if you are not interested in how I arrived at the estimation formula for the metadata size requirement)

Unfortunately, neither the official docs nor the dm tools themselves tell you anything about the size that will be required by the metadata device for correct function of the era device.

The blog entry by Rackcorp [2] simply states that dividing the origin device's size by 1000 is sufficient.

However, that is a very rough estimate. Let's say you have a 1TB drive whose blocks you want to track using dm-era. With this formula you would reserve 1GB on another drive solely for the metadata. But my own tests showed a far smaller space requirement than this, by several orders of magnitude.

So, I wasn't satisfied with this estimate pulled out of thin air, without any indication of what this assumption is actually based on. To get a little closer to reality you are basically left with two choices: you could dig into the kernel's source code related to dm-era and try to derive a formula from the actual code, or you could try to estimate a formula based on a series of measurements done on differently sized era devices.

Since the first option requires a kernel specialist or at least someone familiar with the workings of device mapper and a sufficient amount of time on their hands, I was only left with the second option.

Estimating metadata size using empirical trials

I did the tests within an Ubuntu 16.04 VM with two virtual hard drives, which will be referred to as sdb and sdc. The sdb drive is a small 256M drive that held the metadata, and sdc is a drive of up to 16TB that acted as the origin for the era device.

I then created a dm-era setup which uses a fixed amount of sdc as the origin and tried to find the minimum required metadata size on sdb. For each sdc size I slowly increased the size of the linear mapping on top of sdb in 4k steps, trying to execute the following steps each time:

- set up a linear mapping on sdb using the currently proposed metadata size

- set up a dm-era mapping that

  - uses a fixed-size linear mapping on sdc as origin

  - uses the linear mapping on sdb as metadata device

- try taking and dropping a metadata snapshot 10 times in succession

If the above mentioned procedure fails at any step, the metadata size is insufficient, leading to errors. However, if all of the steps succeed without issues, we deem the chosen metadata size as being sufficient for the current sdc mapping size. This is based on the assumption that taking more than 10 metadata snapshots (eras) or actually writing data to the origin device does not influence the required size any further, i.e. the size requirement does not grow over time.

Testing this with varying sizes of sdc mappings (up to 16TB) led to the following values getting recorded:

| origin data size | required metadata size in KB for 4k (sectors) granularity | required metadata size in KB for 8k (sectors) granularity |
| --- | --- | --- |
| 4M | 64 | 64 |
| 8M | 64 | 64 |
| 16M | 64 | 64 |
| 32M | 64 | 64 |
| 64M | 64 | 64 |
| 128M | 64 | 64 |
| 256M | 64 | 64 |
| 512M | 64 | 64 |
| 1G | 64 | 64 |
| 2G | 68 | 64 |
| 4G | 72 | 68 |
| 8G | 80 | 72 |
| 16G | 96 | 80 |
| 32G | 128 | 96 |
| 64G | 204 | 128 |
| 128G | 344 | 204 |
| 256G | 628 | 348 |
| 512G | 1336 | 628 |
| 1T | 2768 | 1320 |
| 2T | 5568 | 2764 |
| 4T | 11248 | 5560 |
| 8T | 22308 | 11248 |
| 16T | 42388 | 21168 |

Although this looks like an exponential correlation at first glance, note that the tested sizes are not spaced linearly but double with every row. When putting the values into a chart with linear scaling on both axes, an almost perfectly linear correlation becomes visible:

Here I observed the required metadata size for varying origin device sizes while keeping the granularity (the block size of the dm-era setup command) constant.

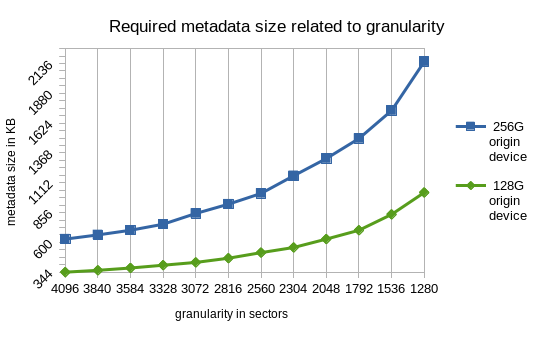

Besides the origin device size, the granularity is the second factor influencing the metadata size requirement. That's why I also had a closer look at varying granularity in a second measurement series. Here I varied the granularity while keeping the origin device size constant:

The graph shows that the relation is not exactly linear, which makes it harder to derive a one-size-fits-all formula for the metadata size, since any approximation based on the origin device size is additionally influenced by the chosen granularity in a non-linear fashion.

This is the reason why I decided to stick to 4k (sectors) granularity while approximating the formula. Using linear regression in the statistics environment R on our measured values in KB, the following formula was generated:

metadata_size = 2.49E-6 * origin_size + 115.4 (all values in KB)

To leave enough space for deviation and to streamline the values to multiples of 4k, I adjusted this to:

metadata_size = round( (2.5E-6 * origin_size + 128) / 4 ) * 4 (all values in KB)
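The adjusted formula can be evaluated with a small shell helper. This is only a sketch of the estimate above for a granularity of 4096 sectors; the function name `metadata_kb` is made up here, and awk is used because plain shell arithmetic has no floating point.

```shell
#!/bin/sh
# metadata_kb ORIGIN_KB
# Evaluates round((2.5E-6 * origin_size + 128) / 4) * 4, all values in KB.
metadata_kb() {
    awk -v origin="$1" 'BEGIN {
        # int(x + 0.5) rounds a positive value to the nearest integer
        printf "%d\n", int((2.5e-6 * origin + 128) / 4 + 0.5) * 4
    }'
}

metadata_kb 262144        # 256 MB origin
metadata_kb 1073741824    # 1 TB origin
```

For the 256 MB origin this prints 128, and for a 1 TB origin it prints 2812, slightly above the 2768 KB measured in the table.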

Setting up dm-era

The following explanations will always refer to the setup overview illustration from above.

"era-meta" - the metadata target

First we will set up a part of sdb to function as storage for the metadata created by dm-era, where it stores information about the eras and blocks. We only need a fraction of the 256 MB that sdb offers. According to our formula explained above, we only require 128 KB for the metadata. To logically partition sdb so that the remaining space may be used otherwise, we create a linear mapping for the first 128 KB. As mentioned before, device mapper works on sector level, thus we need to calculate the number of 512 byte sectors needed for 128 KB:

sectors = 128 * 1024 / 512 = 256

Now we can create a linear mapping (run as root):

dmsetup create era-meta --table "0 256 linear /dev/sdb 0"

This creates a new logical block device called "era-meta" whose 256 sectors (0 to 255) are mapped to sdb in a linear fashion. The last number (zero in this case) is the offset of the mapping on sdb. If you create several metadata targets on sdb, you have to set the offset of each one to the sum of the sizes of all preceding mappings so that they don't overlap on sdb.
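To illustrate the offset rule, the following sketch prints the tables for several metadata areas placed back to back on one device. The helper name `meta_tables` and the three example sizes are assumptions for illustration only; nothing is created on the system.

```shell
#!/bin/sh
# meta_tables DEVICE SECTORS [SECTORS ...]
# Prints one "linear" table per metadata area. The offset of each area is
# the sum of the sizes of all preceding areas, so they do not overlap.
meta_tables() {
    dev=$1
    shift
    offset=0
    for len in "$@"; do
        echo "0 $len linear $dev $offset"
        offset=$((offset + len))
    done
}

# Three metadata areas of 128 KB, 128 KB and 256 KB (256, 256, 512 sectors)
meta_tables /dev/sdb 256 256 512
```

Each printed line can be used as the `--table` argument of a separate `dmsetup create` call.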

We can now verify our 128 KB sized linear mapping called "era-meta":

vagrant@alice:~$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

...

sdb 8:16 0 256M 0 disk

`-era-meta 252:2 0 128K 0 dm

sdc 8:32 0 256M 0 disk

It will show up and be accessible on /dev/mapper/era-meta.

"era" - the data holding target

Since our metadata target is now prepared, we can proceed by creating our actual dm-era target mapping. The era target has to be the same size as the underlying data block device. We need its size in 512 byte sectors again, which we can determine via the following command:

blockdev --getsz /dev/sdc

For our 256 MB sized sdc device this yields 524288 sectors (physical blocks). We are now able to set up our era device (run as root):

dmsetup create era --table "0 524288 era /dev/mapper/era-meta /dev/sdc 4096"

Here we create a new logical block device called "era" that contains a direct linear mapping to sdc for the first 524288 sectors. Additionally, our "era-meta" mapping is used as a target for metadata. The 4096 is the tracking granularity of the era device in sectors, also called "block size" in the official docs. Essentially it is the resolution of the block change tracking mechanism. If anything changes within a block of 4096 sectors, that block is marked as changed.
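The effect of the granularity can be made concrete with a bit of arithmetic. The sketch below assumes 512 byte sectors; the helper name `era_blocks` is made up, and rounding the block count up for origins that are not a multiple of the granularity is an assumption on my side.

```shell
#!/bin/sh
# era_blocks ORIGIN_SECTORS GRANULARITY_SECTORS
# Prints the number of tracked blocks (rounded up) and the size of one
# block in bytes, assuming 512-byte sectors.
era_blocks() {
    blocks=$(( ($1 + $2 - 1) / $2 ))
    block_bytes=$(( $2 * 512 ))
    echo "$blocks blocks of $block_bytes bytes"
}

era_blocks 524288 4096
```

For the setup used here this prints "128 blocks of 2097152 bytes", i.e. the 256 MB origin is tracked in 128 blocks of 2 MB each.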

We can again verify the creation of this mapping:

vagrant@alice:~$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

...

sdb 8:16 0 256M 0 disk

`-era-meta 252:2 0 128K 0 dm

`-era 252:3 0 256M 0 dm

sdc 8:32 0 256M 0 disk

`-era 252:3 0 256M 0 dm

What may look confusing at first is that the 256 MB "era" device is now listed both on top of sdc and on top of "era-meta". This is no error, it is perfectly normal and just how dm-era works. The "era" device shown on top of "era-meta" is simply an indicator that the metadata is stored there. The size of this metadata area is not adjusted by the dm-era module, which is why we needed to determine it manually beforehand and provide a sufficiently sized partition; dm-era simply writes there and errors out when the device is full.

"era-access" - metadata access layer

Due to the nature of the dm-era target, we can't directly access the live metadata on "era-meta" but need to put another identically sized mapping on top of it, which we will simply call "era-access". We use the same linear mapping method and metadata size as before (run as root):

dmsetup create era-access --table "0 256 linear /dev/mapper/era-meta 0"

We have now reached our final setup that represents the initial illustration:

vagrant@alice:~$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

...

sdb 8:16 0 256M 0 disk

`-era-meta 252:2 0 128K 0 dm

|-era 252:3 0 256M 0 dm

`-era-access 252:4 0 128K 0 dm

sdc 8:32 0 256M 0 disk

`-era 252:3 0 256M 0 dm

Comparison to Rackcorp's guide

This is also where Rackcorp's blog entry [2] contains unintended pitfalls/mistakes. First, they presumably create the era device with the size of the metadata device not the origin device because they most likely copied/pasted the wrong variable calculation above it.

Second, they specify 1048576 as the block size parameter of dm-era and declare it as a 1 MB granularity, which seems wrong since those are still 512 byte sectors and would actually result in a 512 MB granularity. Remember that everything device mapper handles is specified in physical (512 byte) sectors!
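The sector arithmetic behind this can be checked quickly. The two helper names below are made up for this sketch, which assumes 512 byte sectors as everywhere else in this post.

```shell
#!/bin/sh
# granularity_sectors BYTES: number of sectors for a granularity of BYTES
granularity_sectors() {
    echo $(( $1 / 512 ))
}

# granularity_bytes SECTORS: bytes covered by a granularity of SECTORS
granularity_bytes() {
    echo $(( $1 * 512 ))
}

granularity_sectors 1048576   # sectors for an intended 1 MB granularity
granularity_bytes 1048576     # bytes actually covered by 1048576 sectors
```

This prints 2048 and 536870912: a 1 MB granularity would need a block size parameter of 2048, whereas 1048576 sectors correspond to 512 MB.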

Using the era device

Relationship between era device and origin device

What many documents and guides fail to mention is the relation between the dm-era device and the origin device. In short, simply use the created era device instead of the origin device. Simple as that. The era device is a plain mapping on top of the origin device. We can easily verify that using the setup described above.

Here we simply look at the start of sdc on byte level:

vagrant@alice:~$ head /dev/sdc | od -x

0000000 0000 0000 0000 0000 0000 0000 0000 0000

Everything is zero/empty as our virtual hard drive has just been created and is not formatted yet.

Now we try to format sdc with ext4:

vagrant@alice:~$ mkfs.ext4 /dev/sdc

mke2fs 1.42.13 (17-May-2015)

/dev/sdc is apparently in use by the system; will not make a filesystem here!

This doesn't work because device mapper holds the underlying block device open exclusively while it is part of the era mapping, so other tools can no longer claim it for writing. This is perfectly normal and required for dm-era to work correctly.

Instead we now format the era device:

vagrant@alice:~$ mkfs.ext4 /dev/mapper/era

[...]

Writing superblocks and filesystem accounting information: done

This time around it works.

To prove this actually ended up on sdc physically, we compare both on byte level:

vagrant@alice:~$ head /dev/sdc | od -x | head

0000000 0000 0000 0000 0000 0000 0000 0000 0000

*

0002000 0000 0001 0000 0004 3333 0000 b7a1 0003

0002020 fff5 0000 0001 0000 0000 0000 0000 0000

0002040 2000 0000 2000 0000 0800 0000 0000 0000

0002060 8167 5807 0000 ffff ef53 0001 0001 0000

0002100 8167 5807 0000 0000 0000 0000 0001 0000

0002120 0000 0000 000b 0000 0080 0000 003c 0000

0002140 0242 0000 007b 0000 3677 e0fa 0ec4 8948

0002160 d49a 947e 501a 3dab 0000 0000 0000 0000

vagrant@alice:~$ head /dev/mapper/era | od -x | head

0000000 0000 0000 0000 0000 0000 0000 0000 0000

*

0002000 0000 0001 0000 0004 3333 0000 b7a1 0003

0002020 fff5 0000 0001 0000 0000 0000 0000 0000

0002040 2000 0000 2000 0000 0800 0000 0000 0000

0002060 8167 5807 0000 ffff ef53 0001 0001 0000

0002100 8167 5807 0000 0000 0000 0000 0001 0000

0002120 0000 0000 000b 0000 0080 0000 003c 0000

0002140 0242 0000 007b 0000 3677 e0fa 0ec4 8948

0002160 d49a 947e 501a 3dab 0000 0000 0000 0000

Both show the same content. The era device is basically nothing but a linear mapping to the underlying block device but additionally keeps track of changed blocks as a bonus feature.

In conclusion, we can handle the era device as just another block device, e.g. mount it:

sudo mkdir /media/sdc-data

sudo mount /dev/mapper/era /media/sdc-data

Now that we have clarified the relation between the era device and its origin device, it is time to look at the actually interesting feature: the metadata and the retrieval of changed blocks.

Using the dm-era metadata

Fortunately, device mapper already provides you with tools for handling the metadata device, so you don't have to poke around on byte level there. On Debian-based systems (e.g. Ubuntu) they are most likely part of a package called thin-provisioning-tools, which you need to install manually first.

In particular we're interested in two tools: era_dump and era_invalidate. Both allow you to examine the current metadata in one way or another:

- era_dump will provide you with an XML-formatted list of blocks of the origin device and the corresponding era which they were last updated at; additionally it informs you about the current era and chosen granularity

- era_invalidate takes an era number as parameter and provides you with an XML-formatted list of all blocks that have been updated since the given era

To make use of the aforementioned commands, you have to take a snapshot of the metadata. Luckily, this is already part of the dm-era module and device mapper provides predefined dmsetup messages for this:

Taking and dropping a snapshot (needs to be run as root!):

dmsetup message era 0 take_metadata_snap

dmsetup message era 0 drop_metadata_snap

The second argument to dmsetup (era in this case) is the name of the era block device as created before. The third argument is always zero for those actions. Whenever you are about to use one of the aforementioned commands to examine the metadata, take a snapshot and drop it as soon as you have parsed the output of the command.
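The take/examine/drop pattern can be wrapped in a small helper so that the snapshot is always dropped again. This is just a sketch: the function name `with_metadata_snap` is made up, and it uses only the two dmsetup messages shown above.

```shell
#!/bin/sh
# with_metadata_snap ERA_DEVICE COMMAND [ARGS...]
# Takes a metadata snapshot, runs COMMAND, then drops the snapshot again,
# even if COMMAND failed. Returns the exit status of COMMAND.
with_metadata_snap() {
    era_dev=$1
    shift
    dmsetup message "$era_dev" 0 take_metadata_snap || return 1
    rc=0
    "$@" || rc=$?
    dmsetup message "$era_dev" 0 drop_metadata_snap
    return "$rc"
}
```

With the setup from this post it could be called as root like `with_metadata_snap era era_dump /dev/mapper/era-access`.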

Era metadata handling example

As basis for our following usage example we take the setup which we created before up to the point where we have already formatted our era device with ext4. For reference, with our exemplary Vagrantfile shown at the beginning, this includes the following sequence of commands:

vagrant up

vagrant ssh

# become root

sudo -i

apt-get install -y thin-provisioning-tools

dmsetup create era-meta --table "0 256 linear /dev/sdb 0"

dmsetup create era --table "0 524288 era /dev/mapper/era-meta /dev/sdc 4096"

dmsetup create era-access --table "0 256 linear /dev/mapper/era-meta 0"

mkfs.ext4 /dev/mapper/era

First let's take a snapshot of the metadata:

dmsetup message era 0 take_metadata_snap

Now let's dump our metadata:

root@alice:~# era_dump /dev/mapper/era-access

...

...

Here we get a list of 128 blocks where each block is 4096 sectors in size (granularity), thus 128 * 4096 * 512 bytes = 256 MB in total, which is exactly the size of our origin device. The important part of the output is the era_array that maps the blocks to the era in which they were last updated. Unfortunately no information on the writeset was available and Rackcorp [2] didn't have a clue what it stands for either. I will discuss that in a bit.

Also we see that we are currently at era 2 (era 1 seems to be the initial one). Each time you take a snapshot, you most likely end up increasing the era counter in the process. However, the official docs [1] state that this isn't guaranteed, so you should always check out the current_era value yourself.

Another method to examine the current era count is to parse the status output of the device mapper target:

root@alice:~# dmsetup status era

0 524288 era 8 12/32 2 9

The second to last field of this output is the current era. The main difference of the status command is that you can use it without taking a snapshot.
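Extracting the era from such a status line is a one-liner in awk. The helper name `current_era` is made up for this sketch, and the sample line is the status output shown above.

```shell
#!/bin/sh
# current_era: reads a dm-era status line on stdin and prints the
# second to last field, which is the current era.
current_era() {
    awk '{ print $(NF - 1) }'
}

# Sample status line from the example above
echo "0 524288 era 8 12/32 2 9" | current_era
```

This prints 2. On a live system the input would come from `dmsetup status era | current_era`.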

In the example above we get all zeroes for the blocks which means they haven't been changed yet. But is this really the case? Didn't we format the era device with ext4? Surely that should have changed some blocks. Well, actually it did. And this is where the mysterious writeset array comes into play.

For now, let's drop our current snapshot and take a new one:

dmsetup message era 0 drop_metadata_snap

dmsetup message era 0 take_metadata_snap

Let's look at the dump again:

root@alice:~# era_dump /dev/mapper/era-access

...

...

We have now advanced to the next era, which is 3. Also we now indeed see blocks that changed in the first era listed. When we compare the current era_array with the writeset of the previous dump, it becomes obvious that wherever the writeset listed a true before, we now have an era identifier of 1 in the era_array.

Taking a close look at the writeset of the previous dump, it actually specifies writeset era="2". This supposedly means that this set of era values is still to be written in the specified era but contains changed blocks from some previous era. The purpose of writeset is not documented anywhere, but from the observations it's reasonable to assume that it represents some kind of caching or buffer swapping mechanism within dm-era. After all, this is essentially just a dump of the metadata; apart from the XML format it may still represent unedited raw data, which in turn may or may not require special care in order to interpret correctly.

In conclusion, when we reach the next era and examine the era_dump output, we can't just trust the era_array if the writeset contains true entries, at least if we want the changed blocks of the directly preceding era. This reduces the usefulness of era_dump for live block tracking, especially in terms of performance when parsing and computing its output.

Luckily, era_dump is not the only tool that the thin-provisioning-tools package provides. There's also era_invalidate, which is a much better choice if you're just after the changed blocks. Basically, era_invalidate requires you to specify an era using the --written-since flag and will return a collection of blocks that have been changed since that era up to the current one.

Just imagine a timeline with steadily increasing eras:

|0|--#--|1|--#--|2|--#--|3|--#--|4|--#-->
                 |   ^       ^       ^
                 '-(written since era 2)

In between the eras, block changes may happen (marked with the hash #). When you specify the --written-since era value, it will list a summary of all blocks that have been changed since that era, i.e. when following the timeline to the right.

Back to our example; we can get the list of the blocks changed during the ext4 formatting operation simply via:

root@alice:~# era_invalidate --written-since 1 /dev/mapper/era-access

Now let's drop our snapshot:

dmsetup message era 0 drop_metadata_snap

We can use dd to write some random data at a specific part of the block device:

dd if=/dev/urandom of=/dev/mapper/era bs=1M seek=60 count=40

This writes exactly 40 MB of random data starting at the 60th 1 MB block on our era device.

Let's have a look at the era_invalidate output again:

root@alice:~# dmsetup message era 0 drop_metadata_snap

root@alice:~# dmsetup message era 0 take_metadata_snap

root@alice:~# era_invalidate --written-since 1 /dev/mapper/era-access

In comparison to the previous output, this now lists blocks ranging from 30 to 50 instead of only block 36. This is exactly where our data from the dd command ended up. Remember that we chose a dm-era granularity of 4096 sectors with each sector being 512 bytes, which yields 2 MB per block - this is why 40 MB of random data starting at 60 MB (as in the dd command) now end up as 20 blocks starting at block 30.

Since we didn't change the --written-since parameter, it additionally lists the changes done in era 1.
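The mapping from the dd parameters to era blocks can be reproduced with integer arithmetic. The helper name `era_block_range` is made up, and printing the end as an exclusive bound is my reading of the "30 to 50" range with 20 changed blocks.

```shell
#!/bin/sh
# era_block_range OFFSET_BYTES LENGTH_BYTES GRANULARITY_SECTORS
# Prints the first era block touched by a write and the first block
# after it (exclusive end), assuming 512-byte sectors.
era_block_range() {
    block_bytes=$(( $3 * 512 ))
    first=$(( $1 / block_bytes ))
    end=$(( ($1 + $2 + block_bytes - 1) / block_bytes ))
    echo "$first $end"
}

# dd bs=1M seek=60 count=40 on a device with a granularity of 4096 sectors
era_block_range $((60 * 1024 * 1024)) $((40 * 1024 * 1024)) 4096
```

This prints "30 50", i.e. the 20 blocks from 30 up to but not including 50.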

Now let's only list the changes done since the previous era. First we need to determine the era we are in now:

root@alice:~# dmsetup status era

0 524288 era 8 12/32 4 7

Since we're in era 4 now (second to last field), let's list all changes since era 3:

root@alice:~# era_invalidate --written-since 3 /dev/mapper/era-access

And this is exactly what has been changed during the dd command on block level.

Another nice feature of era_invalidate is that it automatically merges sequentially connected blocks into ranges in its output which can be used for sequential read operations instead of reading all blocks individually, vastly improving read performance.

Conclusions

Using a sample setup based on a VM and two virtual hard drives, I demonstrated the setup and usage of the dm-era device mapper module to track changes of a block device on block level.

After we've now examined every part of the proposed dm-era setup in an extensive fashion, the following paragraphs serve as a summary of the essential steps to be taken.

Minimal setup

The following is a summary of the minimal steps for the proposed dm-era setup, parameterized with bash variables:

dmsetup create $META_DEV_NAME --table "0 $META_BLOCKSIZE linear /dev/sdb $META_OFFSET"

dmsetup create $ERA_DEV_NAME --table "0 $ORIGIN_BLOCKSIZE era /dev/mapper/$META_DEV_NAME $ORIGIN_DEV $GRANULARITY"

dmsetup create $ERA_ACCESS_NAME --table "0 $META_BLOCKSIZE linear /dev/mapper/$META_DEV_NAME 0"

These three commands have to be used for the initial setup as well as after any subsequent reboot of the machine, since the device mapper structure created is not persistent.

For calculating the value of META_BLOCKSIZE, first calculate the byte size required. For a granularity of 4096, you may use the formula which I determined earlier:

metadata_size = round( (2.5E-6 * origin_size + 128) / 4 ) * 4

This results in KB, so you simply need to multiply it by 1024 and then divide by 512 to get the number of sectors as input for device mapper (as META_BLOCKSIZE above).
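Putting the pieces together, the following sketch derives META_BLOCKSIZE from the origin size and prints the three commands without running them. The function name `era_setup_commands` is made up; it assumes a granularity of 4096 sectors, the mapping names used in this post, and a metadata area starting at offset 0 of the metadata drive.

```shell
#!/bin/sh
# era_setup_commands ORIGIN_DEV ORIGIN_SECTORS META_DEV
# Prints (does not run) the three dmsetup commands of the minimal setup.
era_setup_commands() {
    origin_kb=$(( $2 / 2 ))   # 512-byte sectors to KB
    # estimated metadata size in KB, rounded to a multiple of 4
    meta_kb=$(awk -v o="$origin_kb" \
        'BEGIN { printf "%d", int((2.5e-6 * o + 128) / 4 + 0.5) * 4 }')
    meta_sectors=$(( meta_kb * 1024 / 512 ))
    echo "dmsetup create era-meta --table \"0 $meta_sectors linear $3 0\""
    echo "dmsetup create era --table \"0 $2 era /dev/mapper/era-meta $1 4096\""
    echo "dmsetup create era-access --table \"0 $meta_sectors linear /dev/mapper/era-meta 0\""
}

# Origin size as reported by: blockdev --getsz /dev/sdc
era_setup_commands /dev/sdc 524288 /dev/sdb
```

For the VM from this post, the output matches the three commands used in the walkthrough, with 256 sectors for the metadata mappings.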

Replication routine

The following command sequence may serve as a template for creating a block tracking loop for replication mechanism purposes:

# take a metadata snapshot

dmsetup message $ERA_DEV_NAME 0 take_metadata_snap

# read the current era (second to last field of the status line)

ERA_NUM=$(dmsetup status $ERA_DEV_NAME | awk '{ print $(NF-1) }')

ERA_PREV=$(( ERA_NUM - 1 ))

# parse the list of changed blocks from:

era_invalidate --written-since $ERA_PREV /dev/mapper/$ERA_ACCESS_NAME

# drop metadata snapshot

dmsetup message $ERA_DEV_NAME 0 drop_metadata_snap

# (replicate the changed blocks according to the 'era_invalidate' output)

# (repeat)

Important things to remember

The following list is a short summary about the most important findings and possible pitfalls to look out for:

- using one physical block device for both era origin and metadata device might be possible using LVM but is not possible with pure device mapper, as device mapper doesn't allow it [4]!

- always choose the era device the same size as your origin device, don't be confused by it being listed on top of your metadata device in full size

- do not try to use your origin device directly, always use the created era device within /dev/mapper/ instead for read & write access (this includes reading blocks for replication purposes!)

- do not try to access the metadata device directly, create an identically sized linear mapping on top of it for access (e.g. for tools like era_dump or era_invalidate)

- when using the metadata tools, always take a metadata snapshot before and correctly drop it afterwards

- prefer era_invalidate over era_dump when tracking changed blocks, because the latter may be difficult to parse because of the additional writeset that has to be taken into account

- always keep in mind that device mapper is working on sector level, any block sizes specified have to be multiplied by 512 bytes if you want to know the actual physical byte size!

- keep in mind that the device mapper structure applied to the physical block devices only lasts until reboot; it is necessary to use the exact same dmsetup commands as initially to recreate the mapping after power-up

Limitations and issue regarding reboots

Keep in mind that the dm-era implementation is still considered an experimental feature of the Linux kernel. Judging from the very sparse documentation and the small number of mentions on the net, it isn't widely used either. So I suspect it's neither extensively tested nor suitable for production use.

During my testing I also ran into a rather aggravating issue: the metadata doesn't survive reboots, although the "Resilience" section in the official docs [1] implies otherwise. Even when reapplying the same mappings after powering up the machine and thus restoring the same block device setup as before the shutdown, the era tools report the metadata being broken on subsequent usage attempts.

A quick fix is to zero out the sectors of the metadata device where the metadata target was located before applying the metadata and era device mappings again, which essentially leads to a full reset of the era history. For replication attempts this means there is no way to trace back any unsynced blocks from before the shutdown. A full resync in conjunction with era snapshotting could circumvent this until the replication has caught up again, albeit very costly.
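Zeroing out the metadata area could look like the sketch below. The helper name `zero_region` is made up; it relies only on standard dd options, and conv=notrunc makes it possible to try it on an image file before pointing it at a real device.

```shell
#!/bin/sh
# zero_region TARGET OFFSET_SECTORS LENGTH_SECTORS
# Overwrites LENGTH_SECTORS 512-byte sectors of TARGET with zeros, starting
# at OFFSET_SECTORS. conv=notrunc keeps a regular file from being truncated.
zero_region() {
    dd if=/dev/zero of="$1" bs=512 seek="$2" count="$3" conv=notrunc 2>/dev/null
}
```

For the setup from this post, `zero_region /dev/sdb 0 256` (as root, with the mappings removed) would wipe the 128 KB metadata area. Keep in mind that this discards the complete era history.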

However, I got confirmation on the dm-devel mailing list that this is indeed unintended behaviour and a patch [5] is already underway to fix this. Judging from the short tests I did with this kernel patch it does seem to fix this issue.

[1] https://www.kernel.org/doc/Documentation/device-mapper/era.txt

[2] http://blog.rackcorp.com/2016/03/dm-era-device-for-backups/

[3] https://wiki.gentoo.org/wiki/Device-mapper#Era

[4] https://www.redhat.com/archives/dm-devel/2014-September/msg00168.html

[5] https://www.redhat.com/archives/dm-devel/2017-April/msg00138.html

Acknowledgments

This work has been funded by the fast realtime project.

fast realtime is a basis project within the project fast [6] (fast actuators, sensors and transceivers). fast started in 2013 and is being funded within the BMBF programme "Zwanzig20 - Partnership for Innovation", which is dedicated to the future-related topic of innovative realtime systems. In total, the consortium counts 80 partners.

[6] http://de.fast-zwanzig20.de/fast-komplett/